In the rapidly evolving AI ecosystem, two paradigms have come to dominate. Their names are often used interchangeably, but they are fundamentally distinct in capability and system design. On one side is Generative AI, driven primarily by large language models (LLMs) that produce outputs conditioned on a prompt. On the other is Agentic AI, a systems-level approach that adds autonomy, memory, feedback loops, and task-oriented planning.

The distinction is not purely academic. For developers, the paradigm they choose directly impacts architecture, latency, operational complexity, and ultimately, how well the system aligns with real-world use cases.

Generative AI refers to machine learning models, especially large transformer-based architectures, that are trained to generate data. Many of these models work autoregressively, predicting the next element in a sequence, whether a token in a sentence, a pixel in an image, or the next token of a program. The hallmark of generative AI is prompt in, output out: each inference cycle is short, stateless, and driven by learned probabilistic associations.
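To make "prompt in, output out" concrete, here is a deliberately tiny stand-in for next-token prediction. It is a minimal sketch, not how a transformer works internally: a bigram count table (the hypothetical `FOLLOWS`) replaces the learned model, and `generate` greedily appends the most likely next token. Nothing is stored between calls, so identical prompts always produce identical outputs.

```python
from collections import Counter, defaultdict

# Toy "training": count which token follows which in a tiny corpus.
# A real LLM learns far richer associations with a transformer; this
# bigram table only illustrates the prompt-in, output-out cycle.
CORPUS = "def add ( a , b ) : return a + b".split()
FOLLOWS: dict[str, Counter] = defaultdict(Counter)
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    FOLLOWS[prev][nxt] += 1


def generate(prompt: list[str], max_new_tokens: int = 5) -> list[str]:
    """Greedy next-token decoding. Nothing persists between calls."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        candidates = FOLLOWS.get(tokens[-1]) if tokens else None
        if not candidates:
            break  # no learned continuation: stop generating
        tokens.append(candidates.most_common(1)[0][0])  # most likely next token
    return tokens


print(generate(["def"], max_new_tokens=3))  # ['def', 'add', '(', 'a']
```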

For example, a generative code assistant may autocomplete a function based on the immediate context, leveraging learned patterns from billions of code samples. However, once the output is generated, the system retains no awareness of what was done unless the input is augmented with historical data.
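Because the model itself keeps no state, "memory" has to be supplied by the caller on every request. A minimal sketch of that augmentation, assuming a plain-text chat layout: the `User:`/`Assistant:` labels and the `build_prompt` helper are illustrative conventions, not any vendor's API.

```python
def build_prompt(request: str, history: list[tuple[str, str]], max_turns: int = 3) -> str:
    """Re-send recent turns so a stateless model can 'see' earlier work.

    The User:/Assistant: layout is an illustrative convention, not a specific API.
    """
    # history[-0:] would return the whole list, so handle max_turns <= 0 explicitly
    recent = history[-max_turns:] if max_turns > 0 else []
    parts = [f"User: {user}\nAssistant: {reply}" for user, reply in recent]
    parts.append(f"User: {request}\nAssistant:")
    return "\n".join(parts)


history = [("Write add(a, b)", "def add(a, b): return a + b")]
print(build_prompt("Now add type hints", history))
```

The `max_turns` cap reflects a practical constraint: context windows are finite, so older turns must eventually be dropped or summarized.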

In terms of system architecture, a generative model typically handles each request as a single inference call (one forward pass per generated token), with no memory persistence, tool orchestration, or environmental feedback. Such models are best suited to tasks that are well-defined, static, and creative in nature.

Agentic AI introduces a higher level of abstraction. It views intelligent systems not as mere pattern matchers, but as autonomous actors capable of perceiving a goal, planning a course of action, interacting with external systems, monitoring execution, and modifying plans based on feedback.

An agentic system is fundamentally stateful. It maintains internal memory, tracks previous interactions, and considers intermediate steps before producing final outputs. It can interact with APIs, file systems, databases, deployment pipelines, and more, adapting its behavior in real time.

These agents often consist of multiple modules: a planner to break down goals, a tool executor to interface with environments, a controller to orchestrate steps, and a memory module to track state. In contrast to the one-shot nature of generative models, agentic systems operate over time, often looping through perception, planning, execution, and revision.
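The four modules described above can be wired together in a few dozen lines. This is a minimal sketch under stated assumptions: `planner` and `tool_executor` are hypothetical stand-ins (a real planner might query an LLM, and a real executor would call shells or APIs), and the controller simply stops on failure where a fuller agent would re-plan.

```python
from dataclasses import dataclass, field
from typing import Callable

Step = str
Result = tuple[bool, str]  # (succeeded, observation)


@dataclass
class Memory:
    """Memory module: an ordered log of steps and what each one returned."""
    events: list[tuple[Step, Result]] = field(default_factory=list)


def planner(goal: str) -> list[Step]:
    # Hypothetical fixed decomposition; a real planner might query an LLM.
    return [f"inspect {goal}", f"modify {goal}", f"verify {goal}"]


def tool_executor(step: Step) -> Result:
    # Hypothetical executor; a real one would call shells, APIs, or SDKs.
    return True, f"done: {step}"


def controller(
    goal: str,
    plan: Callable[[str], list[Step]],
    execute: Callable[[Step], Result],
    memory: Memory,
) -> Memory:
    """Controller: run the plan step by step, recording state as it goes."""
    for step in plan(goal):
        ok, observation = execute(step)
        memory.events.append((step, (ok, observation)))
        if not ok:
            break  # a fuller agent would revise the plan here instead of stopping
    return memory


mem = controller("config.yaml", planner, tool_executor, Memory())
print(len(mem.events))  # 3
```

Passing the planner and executor in as parameters keeps each module swappable, which mirrors how agent frameworks separate planning from tool execution.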

Generative AI models are limited when a task requires sustained attention across multiple steps. Their token-by-token inference has no built-in mechanism to monitor or revise past actions. Developers often use techniques like chain-of-thought prompting or external state injection to mimic multi-step reasoning, but these are patchwork solutions.

In contrast, agentic systems are built to plan, decompose, and execute. When faced with a complex task, such as migrating a codebase from one framework to another, an agent can:

This kind of execution demands long-term memory, conditional logic, and asynchronous planning. Agentic AI frameworks such as LangGraph, CrewAI, and AutoGen allow developers to build workflows where each decision informs the next, making these systems more reliable for tasks like:

Generative models fail in such workflows because they cannot hold execution context across steps or adapt to intermediate results without manual intervention.
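Holding execution context across steps largely comes down to running subtasks in dependency order and feeding each result forward. A minimal sketch using Python's standard `graphlib.TopologicalSorter`; the migration subtasks in `MIGRATION` are hypothetical, and the string result stands in for real work.

```python
from graphlib import TopologicalSorter

# Hypothetical subtasks for a framework migration, each mapped to its prerequisites.
MIGRATION = {
    "inventory_modules": set(),
    "update_dependencies": {"inventory_modules"},
    "rewrite_imports": {"inventory_modules"},
    "port_handlers": {"update_dependencies", "rewrite_imports"},
    "run_tests": {"port_handlers"},
}


def execute_plan(graph: dict[str, set[str]]) -> dict[str, str]:
    """Run subtasks in dependency order, passing upstream results forward."""
    results: dict[str, str] = {}
    for task in TopologicalSorter(graph).static_order():
        # Every prerequisite has already run, so its result is available here.
        consumed = [results[dep] for dep in sorted(graph.get(task, set()))]
        # Placeholder for real work that would use the consumed results.
        results[task] = f"{task} done (used {len(consumed)} upstream result(s))"
    return results


order = list(execute_plan(MIGRATION))
print(order[0], order[-1])  # inventory_modules run_tests
```

A generative model asked to "migrate the codebase" in one shot has no equivalent of `results`; the agent, by contrast, can inspect each intermediate result before deciding the next step.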

Generative models are static predictors. They do not perceive environments. For instance, when generating code, they do not know if a referenced file exists or whether an API call has succeeded. To bridge this, developers often chain LLMs with external tools, relying on wrappers, scripts, or orchestrators.
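One common wrapper pattern is to check generated output against the real environment before accepting it. A minimal sketch, assuming the generated artifact is Python source: the hypothetical `missing_imports` helper parses the code with the standard `ast` module and asks `importlib.util.find_spec` whether each top-level imported module is installed. It inspects imports only (not file paths or API calls) and raises `SyntaxError` if the generated code does not parse.

```python
import ast
import importlib.util


def missing_imports(generated_code: str) -> list[str]:
    """Return top-level modules the generated code imports but this environment lacks."""
    tree = ast.parse(generated_code)  # raises SyntaxError on invalid code
    modules: set[str] = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules.add(node.module.split(".")[0])  # absolute "from x import y" only
    # find_spec returns None when a top-level module cannot be located
    return sorted(m for m in modules if importlib.util.find_spec(m) is None)


snippet = "import json\nfrom os import path\nimport surely_not_a_real_module_xyz"
print(missing_imports(snippet))  # ['surely_not_a_real_module_xyz']
```

The model never learns about the failure on its own; the wrapper has to detect it and decide whether to re-prompt, which is exactly the glue code agentic systems fold into their own loop.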

Agentic AI treats environment interaction as a first-class construct. Agents are designed to observe the external world, act accordingly, and adapt to changes in the environment. They can:

From a developer’s perspective, this is transformative. Imagine an agent that detects failing unit tests, identifies the root cause through log analysis, patches the relevant module, re-runs the tests, and commits the fix along with a pull request that summarizes the change. This level of context awareness and interaction is practical only with agentic systems.
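The detect, patch, and re-run cycle from that scenario can be sketched against a simulated repository. Everything here is hypothetical: `FakeRepo` plants a bug and runs one test via `exec`, `propose_patch` applies a hard-coded rule where a real agent might consult an LLM, and "commit and open PR" is a placeholder for real git tooling.

```python
from dataclasses import dataclass


@dataclass
class FakeRepo:
    """Simulated environment: one module with a planted bug."""
    source: str = "def add(a, b):\n    return a - b\n"

    def run_tests(self) -> tuple[bool, str]:
        namespace: dict = {}
        exec(self.source, namespace)  # load the module under test
        got = namespace["add"](2, 3)
        if got == 5:
            return True, "1 passed"
        return False, f"AssertionError: add(2, 3) returned {got}, expected 5"


def propose_patch(source: str, log: str) -> str:
    # Hypothetical root-cause step: a real agent might send the log and
    # source to an LLM; here a single hard-coded rule stands in for that.
    if "add(2, 3)" in log and "a - b" in source:
        return source.replace("a - b", "a + b")
    return source


def fix_until_green(repo: FakeRepo, max_attempts: int = 3) -> list[str]:
    trace: list[str] = []
    for attempt in range(1, max_attempts + 1):
        passed, log = repo.run_tests()  # observe the environment
        trace.append(f"attempt {attempt}: {log}")
        if passed:
            trace.append("commit and open PR")  # placeholder for git/PR tooling
            return trace
        repo.source = propose_patch(repo.source, log)  # act on the environment
    trace.append("escalate to a human")
    return trace


for line in fix_until_green(FakeRepo()):
    print(line)
```

The key difference from a one-shot generation is that the agent reads the test output after each change and only stops once the environment confirms success or the attempt budget runs out.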

Feedback is a key mechanism in intelligent behavior. In classical control systems, feedback loops drive stability and robustness. Generative models, however, are fundamentally open-loop systems. They produce output and move on. Any feedback must be manually injected back through re-prompting or chaining.

Agentic AI systems are closed-loop architectures. They process the outcome of each action, determine success or failure, and choose the next step accordingly. Error handling is built into the control logic, allowing retries, fallbacks, alternate strategies, or escalation.
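The retry, fallback, and escalation logic described above maps naturally onto a small control function. A minimal sketch, assuming each strategy is a zero-argument callable that either returns a result or raises; `run_with_feedback`, `Escalation`, and the example strategies are hypothetical names, not part of any framework.

```python
import time
from typing import Callable


class Escalation(Exception):
    """Raised when every strategy has failed and a human must step in."""


def run_with_feedback(
    strategies: list[Callable[[], str]],
    retries: int = 2,
    base_delay: float = 0.0,
) -> str:
    """Closed loop: try, inspect the outcome, then retry, fall back, or escalate."""
    errors: list[str] = []
    for strategy in strategies:  # primary strategy first, then fallbacks
        name = getattr(strategy, "__name__", repr(strategy))
        for attempt in range(retries + 1):
            try:
                return strategy()  # success: the loop closes here
            except Exception as exc:  # broad on purpose: every failure is feedback
                errors.append(f"{name} attempt {attempt + 1}: {exc}")
                if attempt < retries:
                    time.sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise Escalation("; ".join(errors))


def flaky_primary() -> str:  # hypothetical source that is currently down
    raise ConnectionError("source unreachable")


def cached_copy() -> str:  # hypothetical fallback
    return "loaded from cache"


print(run_with_feedback([flaky_primary, cached_copy]))  # loaded from cache
```

Collecting every error message before escalating means the human (or a supervising agent) receives the full history of what was tried.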

For example, an agent tasked with ingesting a CSV from an external source might:

This iterative behavior is not only more robust but also essential for production-grade AI systems where reliability, transparency, and observability matter. Developers need agents that can debug themselves, handle exceptions, and learn from execution traces.
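For the CSV ingestion scenario, the essential move is to turn validation results into structured feedback that determines the next step. A minimal sketch using Python's standard `csv` module; the required columns (`id`, `email`), the validation rules, and the action names returned by `next_action` are all hypothetical.

```python
import csv
import io

REQUIRED = {"id", "email"}  # hypothetical schema for the incoming file


def ingest(raw: str) -> tuple[list[dict], list[str]]:
    """Parse CSV text, returning accepted rows plus issues the agent can act on."""
    reader = csv.DictReader(io.StringIO(raw))
    missing = REQUIRED - set(reader.fieldnames or [])
    if missing:
        return [], [f"missing columns: {sorted(missing)}"]
    rows, issues = [], []
    for row in reader:
        id_value = (row.get("id") or "").strip()
        email = (row.get("email") or "").strip()
        if not id_value.isdigit():
            issues.append(f"line {reader.line_num}: non-numeric id {id_value!r}")
        elif "@" not in email:
            issues.append(f"line {reader.line_num}: invalid email {email!r}")
        else:
            rows.append(row)
    return rows, issues


def next_action(rows: list[dict], issues: list[str]) -> str:
    """Choose the next step from the outcome, instead of moving on blindly."""
    if issues and not rows:
        return "refetch_or_escalate"
    if issues:
        return "quarantine_bad_rows"
    return "load"


rows, issues = ingest("id,email\n1,a@x.com\nx,b@x.com\n3,nope\n")
print(next_action(rows, issues), issues)
```

Returning issues as data, rather than raising on the first bad row, is what lets the agent choose between loading, quarantining, and re-fetching.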

LLMs operate on a prompt-response cycle and have no native notion of goals. Even advanced prompting strategies, such as few-shot examples or role instructions, only simulate intent within the current context. The model does not track its progress toward a defined objective.

Agentic systems, on the other hand, are structured around goals, not prompts. A developer can specify a high-level objective, such as “generate a report of the top 100 error-producing endpoints in the last 7 days,” and the agent can:

The agent does not need to be prompted for each subtask. It uses planning modules and context memory to reach the goal. This aligns with how software engineers reason about tasks: not as isolated text completions, but as logical sequences of stateful actions that progress toward a deliverable.
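The example goal ("top 100 error-producing endpoints in the last 7 days") decomposes into subtasks an agent can chain without further prompting: apply the time window, filter errors, rank, and render. A minimal sketch under loud assumptions: the `LogEntry` shape is hypothetical, fetching logs is out of scope (entries are passed in), and "error" is taken to mean an HTTP 5xx status.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone
from typing import Iterable, NamedTuple


class LogEntry(NamedTuple):  # hypothetical log record shape
    timestamp: datetime
    endpoint: str
    status: int


def top_error_endpoints(
    entries: Iterable[LogEntry], now: datetime, days: int = 7, limit: int = 100
) -> list[tuple[str, int]]:
    cutoff = now - timedelta(days=days)  # subtask 1: time window
    errors = Counter(
        e.endpoint
        for e in entries
        if e.timestamp >= cutoff and e.status >= 500  # subtask 2: 5xx assumed to mean error
    )
    return errors.most_common(limit)  # subtask 3: rank and truncate


def render_report(ranked: list[tuple[str, int]]) -> str:
    return "\n".join(f"{endpoint}\t{count}" for endpoint, count in ranked)  # subtask 4


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
logs = [
    LogEntry(now - timedelta(days=1), "/orders", 500),
    LogEntry(now - timedelta(days=10), "/orders", 500),  # outside the window
    LogEntry(now - timedelta(hours=3), "/login", 200),   # not an error
]
print(render_report(top_error_endpoints(logs, now)))
```

In an agentic setting, the planner would produce this sequence of subtasks from the goal statement and memory would carry each intermediate result to the next step.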

Tool use is a major limitation of generative models. While they can generate tool-specific syntax or suggest API calls, they cannot execute those actions, interpret results, or adjust based on system state.

Agentic AI is explicitly designed for tool affordance and execution. An agent can have a registry of tools (e.g., shell commands, REST endpoints, cloud SDKs) and decide at runtime which tools to invoke. Developers can expose custom toolkits, define input-output schemas, and embed constraints that guide tool usage.
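A tool registry with input schemas and usage constraints can be sketched without any framework. In this minimal sketch, `Tool`, `ToolRegistry`, and the `restart_service` tool are hypothetical; in a real agent, the tool name and arguments passed to `invoke` would come from the model's output, and the registry is what validates them before anything executes.

```python
from dataclasses import dataclass
from typing import Any, Callable


def _always_allowed(args: dict[str, Any]) -> bool:
    return True


@dataclass(frozen=True)
class Tool:
    name: str
    func: Callable[..., Any]
    schema: dict[str, type]  # parameter name -> expected type
    allowed: Callable[[dict[str, Any]], bool] = _always_allowed  # usage constraint


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def invoke(self, name: str, args: dict[str, Any]) -> Any:
        tool = self._tools.get(name)
        if tool is None:
            raise KeyError(f"unknown tool: {name}")
        if set(args) != set(tool.schema):  # exact argument names required
            raise ValueError(f"{name} expects {sorted(tool.schema)}, got {sorted(args)}")
        for key, expected in tool.schema.items():
            if not isinstance(args[key], expected):
                raise TypeError(f"{name}.{key} must be {expected.__name__}")
        if not tool.allowed(args):
            raise PermissionError(f"{name} call blocked by constraint: {args}")
        return tool.func(**args)


registry = ToolRegistry()
registry.register(Tool(
    name="restart_service",  # hypothetical tool
    func=lambda service, env: f"restarted {service} in {env}",
    schema={"service": str, "env": str},
    allowed=lambda args: args["env"] != "prod",  # constraint: never touch prod
))
print(registry.invoke("restart_service", {"service": "api", "env": "staging"}))
```

Checking names, types, and constraints before execution keeps a malformed or unsafe model decision from ever reaching the underlying system.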

This makes agentic systems ideal for automating workflows that touch multiple systems, including:

Developers benefit from explicit tool chaining, observability, and type-safe control over tool invocation. This enables scalable, secure, and composable AI behavior that a standalone LLM cannot deliver.

Generative models are powerful for lightweight, creative, or exploratory tasks. They shine in scenarios where:

This includes use cases such as:

For developers, generative AI remains essential in prototyping, inspiration, and lightweight automation. However, its limitations become apparent as soon as task complexity, execution depth, or environmental control comes into play.

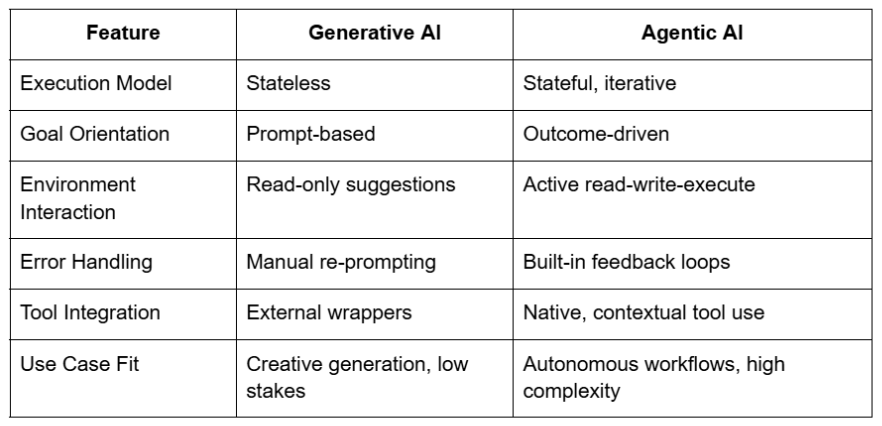

When selecting between generative and agentic AI, developers must align system capabilities with real use case demands. The following matrix can help guide the decision:

For example, if you are building an AI-powered test generator, a generative model may suffice. However, if your goal is to have an autonomous test executor that writes, runs, validates, and modifies tests in a CI/CD pipeline, an agentic system is indispensable.
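The criteria this article keeps returning to (state across multiple steps, executing actions rather than suggesting them, and reacting to feedback) can be encoded as a rough first-pass heuristic. A minimal sketch: the `UseCase` fields and the `recommend` function are hypothetical, and a real decision would also weigh latency, cost, and operational complexity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    multi_step: bool        # must carry state across several dependent steps
    executes_actions: bool  # must run tools or APIs, not just suggest them
    needs_feedback: bool    # must react to results such as test failures


def recommend(case: UseCase) -> str:
    """Rough heuristic: any one of these needs points toward an agentic design."""
    if case.multi_step or case.executes_actions or case.needs_feedback:
        return "agentic"
    return "generative"


for case in (
    UseCase("test generator", False, False, False),
    UseCase("autonomous CI test executor", True, True, True),
):
    print(f"{case.name}: {recommend(case)}")
```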

The shift toward Agentic AI represents a natural evolution in how we design intelligent systems. For developers, this is more than a trend. It signals a move from prompt engineering to system engineering. From one-shot completions to continuous reasoning. From passive suggestion to active execution.

As agentic frameworks mature and integrate seamlessly with developer tools, the ability to build autonomous, robust, and goal-aligned systems becomes not just feasible but essential.

Developers who embrace agentic paradigms will be the ones who lead in building the next generation of intelligent software systems.