Modern software systems operate under demanding performance constraints where milliseconds, or even microseconds, matter. Whether you are building a high-throughput backend API, a low-latency game engine, or a distributed inference system for machine learning, identifying and optimizing critical path code is essential to achieving performance at scale. Critical path code is the portion of execution that directly determines the latency, throughput, or resource consumption of your application. General code optimization can spend effort on code that barely affects end-to-end behavior; work on the critical path, by contrast, translates directly into system-level gains.

In this post, we explore how static analysis and dynamic analysis, when combined with modern AI techniques, can automatically detect, prioritize, and remediate performance bottlenecks in critical path code. The combination reduces human guesswork, enables intelligent prioritization, and automates what was once manual, resource-intensive work. We cover how each type of analysis works, how AI enhances each one individually, and what happens when the approaches are fused for intelligent, continuous optimization.

In software engineering, critical path code refers to the longest chain of dependent operations in a task, the chain that sets the task's total execution time. Because nothing downstream can finish before this chain does, time saved here shows up directly in end-to-end performance, while time saved elsewhere often does not. Any function, API call, loop, or conditional logic that sits on a frequently executed path, particularly one that is time-sensitive or blocks downstream operations, falls into this category.
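To make the idea concrete, here is a minimal sketch that treats one request as a graph of dependent steps and finds the longest chain through it. The task names and millisecond costs are invented for illustration, and a real system would derive the graph from traces rather than hard-code it.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

def critical_path(durations, deps):
    """Return (total_time, path) for the longest dependency chain.

    durations: {task: cost in ms}
    deps: {task: set of tasks that must finish before it starts}
    """
    graph = {task: deps.get(task, set()) for task in durations}
    finish = {}  # earliest possible finish time of each task
    prev = {}    # predecessor on the longest chain ending at each task

    # static_order() yields every task after all of its dependencies.
    for task in TopologicalSorter(graph).static_order():
        slowest_dep = max(graph[task], key=lambda d: finish[d], default=None)
        start = finish[slowest_dep] if slowest_dep is not None else 0
        finish[task] = start + durations[task]
        prev[task] = slowest_dep

    # Walk back from the task that finishes last.
    node = max(finish, key=finish.get)
    total = finish[node]
    path = []
    while node is not None:
        path.append(node)
        node = prev[node]
    return total, path[::-1]

# Hypothetical request: all names and costs are assumptions.
durations = {"parse": 2, "auth": 5, "db_query": 40, "cache_lookup": 3, "render": 10}
deps = {
    "auth": {"parse"},
    "db_query": {"auth"},
    "cache_lookup": {"auth"},
    "render": {"db_query", "cache_lookup"},
}

total_ms, path = critical_path(durations, deps)
print(total_ms, " -> ".join(path))
```

In this toy graph, `cache_lookup` is off the critical path, so making it faster would not change the total at all, whereas every millisecond removed from `db_query` would.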

Developers often miss the critical path because it shifts with deployment conditions, input distribution, and runtime environment. That variability makes the problem a good fit for a hybrid approach combining static and dynamic introspection, guided by AI models that can reason across the stack.

Static analysis refers to analyzing code artifacts without executing the program. It uses program structure, control flow, data flow, and type information to reason about possible execution paths and identify structural inefficiencies or unsafe patterns. Tools build representations like Abstract Syntax Trees (ASTs), Control Flow Graphs (CFGs), and call graphs.
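As a small illustration of reasoning from structure alone, the sketch below uses Python's built-in `ast` module to parse source text and report the deepest loop nesting in each function, without ever running the code. The sample functions in `SOURCE` are made up for the example, and loop depth is only one rough structural signal among many that a real analyzer would collect.

```python
import ast

# Sample code to analyze. It is parsed, never executed.
SOURCE = '''
def find_pairs(items):
    pairs = []
    for a in items:
        for b in items:
            if a + b == 0:
                pairs.append((a, b))
    return pairs

def total(items):
    result = 0
    for x in items:
        result += x
    return result
'''

LOOP_NODES = (ast.For, ast.While, ast.AsyncFor)

def max_loop_depth(node, depth=0):
    """Deepest nesting of for/while loops found beneath `node`."""
    deepest = depth
    for child in ast.iter_child_nodes(node):
        child_depth = depth + 1 if isinstance(child, LOOP_NODES) else depth
        deepest = max(deepest, max_loop_depth(child, child_depth))
    return deepest

def loop_depth_by_function(source):
    """Map each function name in `source` to its maximum loop depth."""
    tree = ast.parse(source)
    return {
        fn.name: max_loop_depth(fn)
        for fn in ast.walk(tree)
        if isinstance(fn, (ast.FunctionDef, ast.AsyncFunctionDef))
    }

print(loop_depth_by_function(SOURCE))
```

The result flags `find_pairs` as structurally quadratic, but it says nothing about how often the function runs or how large `items` is, which is exactly the gap that runtime data has to fill.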

Common static analysis tasks include:

Static analysis is powerful but inherently conservative. Because it lacks concrete runtime inputs, it assumes worst-case scenarios and may generate false positives or miss dynamic bottlenecks entirely. It cannot determine the runtime frequency or performance cost of paths unless paired with historical telemetry or instrumentation data.

With the integration of AI, particularly large language models and graph-based neural networks, static analysis moves from being rule-based to context-aware. Here is how AI elevates static analysis capabilities:

These enhancements reduce the cognitive load on developers, flagging only the most impactful findings while contextualizing them in terms of runtime performance.

Dynamic analysis is the process of analyzing a program's behavior during execution. This approach yields runtime performance data, including CPU and memory usage, system calls, thread scheduling, I/O latency, and network activity. Tools like perf, eBPF, Valgrind, DTrace, Java Flight Recorder (JFR), and others sample, instrument, or trace running programs to collect performance metrics during actual workloads.
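For a feel of what runtime measurement looks like at the smallest scale, here is a sketch using Python's built-in `cProfile` and `pstats` modules. The workload functions are placeholders invented for the example; the tools named above do the same job at the system level with far lower overhead.

```python
import cProfile
import pstats

def slow_sum(n):
    # Deliberately CPU-heavy placeholder workload.
    total = 0
    for i in range(n):
        total += i * i
    return total

def fast_constant():
    return 42

def handle_request():
    slow_sum(500_000)
    fast_constant()

# Record timings only while the workload runs.
profiler = cProfile.Profile()
profiler.enable()
handle_request()
profiler.disable()

# get_stats_profile() (Python 3.9+) exposes per-function timings by name.
profile = pstats.Stats(profiler).get_stats_profile()
ranked = sorted(
    profile.func_profiles.items(),
    key=lambda item: item[1].cumtime,
    reverse=True,
)
for name, fp in ranked[:3]:
    print(f"{name:20s} calls={fp.ncalls:>6s} cumulative={fp.cumtime:.3f}s")
```

Exact timings vary from machine to machine, but `slow_sum` should dominate the cumulative time of `handle_request`, which is the kind of attribution a profiler is meant to surface.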

Typical outputs include:

While dynamic profiling is accurate for the workloads it observes, it produces high-volume, low-signal data. Developers need to sift through thousands of traces and logs to understand what matters. Moreover, manual interpretation often attributes bottlenecks to the wrong code, especially when the real cause is an external service or an asynchronous operation.

AI brings structure, pattern recognition, and prioritization to dynamic data streams, making it easier to extract meaningful insights.

One of the most transformative capabilities is the ability to correlate static representations with dynamic runtime metrics. AI models enable this by associating function hashes, source lines, or control flow segments with observed traces.
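The correlation step can be pictured as a join on a shared key. The sketch below is plain Python with no model involved: it merges per-function static facts with observed trace samples so that each code region carries both views. The `CodeRegion` key, the field names, and all of the sample data are assumptions made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CodeRegion:
    """Hypothetical join key shared by the static and dynamic sides."""
    file: str
    function: str

def correlate(static_facts, trace_samples):
    """Attach runtime sample counts and total time to static facts."""
    merged = {
        region: {**facts, "samples": 0, "total_ms": 0.0}
        for region, facts in static_facts.items()
    }
    for region, elapsed_ms in trace_samples:
        # Regions seen only at runtime still get a row, with no static facts.
        row = merged.setdefault(
            region, {"loop_depth": None, "samples": 0, "total_ms": 0.0}
        )
        row["samples"] += 1
        row["total_ms"] += elapsed_ms
    return merged

# Invented static facts, e.g. from an AST pass.
static_facts = {
    CodeRegion("orders.py", "price_cart"): {"loop_depth": 2},
    CodeRegion("orders.py", "format_receipt"): {"loop_depth": 1},
}
# Invented trace samples: (region, elapsed milliseconds).
trace_samples = [
    (CodeRegion("orders.py", "price_cart"), 12.5),
    (CodeRegion("orders.py", "price_cart"), 7.5),
    (CodeRegion("orders.py", "format_receipt"), 0.5),
]

merged = correlate(static_facts, trace_samples)
hottest = max(merged, key=lambda region: merged[region]["total_ms"])
print(hottest.function, merged[hottest])
```

A merged table like this is the sort of input a learned model could then consume; the join itself needs nothing more than a stable identifier on both sides.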

AI models can now score code regions not just by static measures such as cyclomatic complexity or nesting depth, but also by:

This multidimensional prioritization allows for laser-focused remediation of true performance bottlenecks instead of wasting time on micro-optimizations with negligible ROI.
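One way to see why micro-optimizations off the hot path pay so little is Amdahl's law, a standard result that this post does not otherwise rely on: if a region accounts for a fraction `p` of total runtime and is made `s` times faster, the overall speedup is `1 / ((1 - p) + p / s)`. The numbers below are illustrative only.

```python
def overall_speedup(fraction, local_speedup):
    """Amdahl's law: whole-program speedup from accelerating one region.

    fraction: share of total runtime spent in the region (0.0 to 1.0)
    local_speedup: how many times faster the region becomes
    """
    return 1.0 / ((1.0 - fraction) + fraction / local_speedup)

# A hot region (60% of runtime) made 2x faster.
hot = overall_speedup(0.60, 2.0)
# A cold region (2% of runtime) made 10x faster.
cold = overall_speedup(0.02, 10.0)

print(f"hot path:  {hot:.3f}x overall")
print(f"cold path: {cold:.3f}x overall")
```

A modest 2x gain on the hot region beats a heroic 10x gain on the cold one by a wide margin, which is the argument for ranking work by measured runtime share before touching any code.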

Modern AI agents integrate into development workflows via IDEs or CI pipelines. Once critical path regions are identified, these agents can:

Such agents can shorten the performance feedback loop from days to minutes and move teams toward a self-correcting cycle in which regressions are detected and addressed continuously.
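The simplest building block of such a loop is a regression gate in CI. The sketch below compares current benchmark timings against a stored baseline and reports anything that slowed down by more than a tolerance. The benchmark names, timings, and the 10% default are all hypothetical, and a real agent would layer diagnosis and suggested fixes on top of a check like this.

```python
def find_regressions(baseline_ms, current_ms, tolerance=0.10):
    """Return (name, percent_slower) for benchmarks beyond the tolerance."""
    regressions = []
    for name, base in baseline_ms.items():
        now = current_ms.get(name)
        if now is None or base <= 0:
            continue  # benchmark missing in this run or baseline unusable
        change = (now - base) / base
        if change > tolerance:
            regressions.append((name, round(change * 100, 1)))
    # Worst offenders first.
    return sorted(regressions, key=lambda item: item[1], reverse=True)

# Invented numbers for illustration.
baseline = {"checkout": 120.0, "search": 80.0, "login": 40.0}
current = {"checkout": 150.0, "search": 82.0, "login": 40.0}

for name, pct in find_regressions(baseline, current):
    print(f"REGRESSION {name}: {pct}% slower than baseline")
```

In a pipeline, a non-empty result would fail the build or open a ticket, so the slowdown is caught minutes after the commit instead of days later in production.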

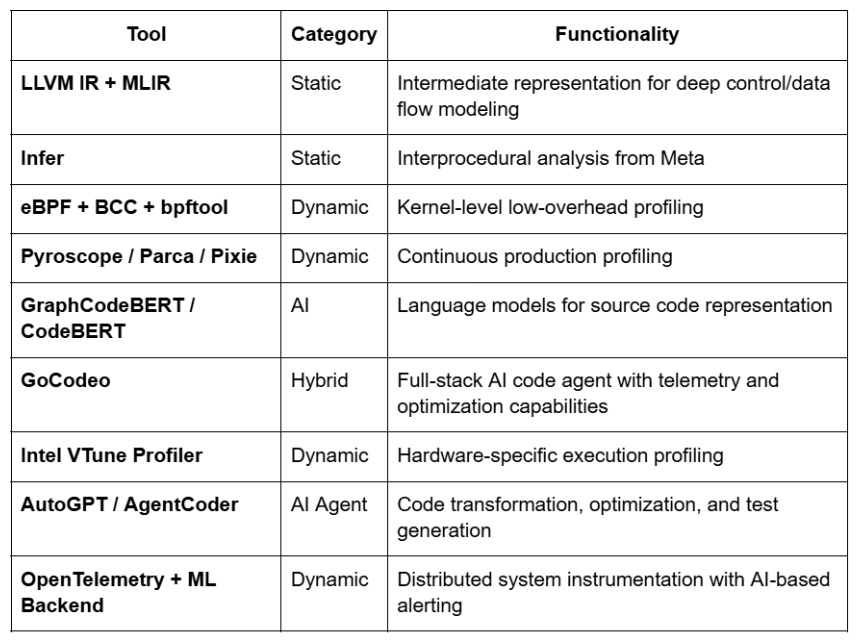

Here is a non-exhaustive but highly practical list of tools used across the software industry for static, dynamic, and AI-assisted optimization tasks.

The convergence of static analysis, dynamic profiling, and AI models introduces a new paradigm of intelligent performance engineering. Critical path code can now be identified with greater precision, optimized with less developer effort, and monitored continuously in real-world conditions. Instead of relying solely on intuition or manual inspection, development teams can leverage AI-driven insights to ship faster, safer, and more efficient systems.

This new class of AI-powered developer tooling is not just augmentative but increasingly autonomous. With AI agents operating across the software lifecycle, critical path optimization becomes continuous, contextual, and more cost-efficient.

If you are working on latency-sensitive systems, mission-critical backends, or performance-focused products, it is time to integrate AI-powered static and dynamic analysis into your development pipeline.