Speed and reliability are non-negotiable in modern software development. Continuous Integration and Continuous Deployment (CI/CD) practices have transformed how teams ship code, enabling developers to push features, bug fixes, and enhancements rapidly. However, as codebases grow more complex, teams become more distributed, and release cycles shorten, traditional CI/CD workflows run into scalability bottlenecks. AI coding tools are increasingly positioned as enablers of intelligent, scalable, and automated CI/CD pipelines.

This post explores how AI coding tools integrate into the CI/CD lifecycle, optimize key processes, and drive efficiency through intelligent automation. We will look at the architecture, the technical implications, and the real-world tooling developers can use to improve their DevOps practices.

Continuous Integration refers to the practice of frequently merging code changes into a shared repository. Developers commit code in short iterations, and each commit triggers a series of automated actions such as compilation, static analysis, and unit testing. This approach detects integration issues early, ensures that changes are compatible with the shared codebase, and improves collaboration across teams.

Continuous Deployment builds on Continuous Integration by automating the delivery of code to production environments. Once code passes all tests and validations, it is automatically pushed to staging or live environments without manual intervention. This eliminates the traditional bottlenecks of release approvals, reduces time to market, and enables a steady flow of updates to end-users.

CI/CD pipelines often suffer from issues such as flaky tests, unoptimized builds, inadequate test coverage, misconfigured environments, and high cognitive load on developers. As the complexity of applications increases, so does the need for smarter, context-aware systems that can reduce errors, predict failures, and optimize workflow without constant human intervention. This is where AI coding tools prove transformative.

AI-driven coding tools are not limited to code generation or auto-completion. They integrate into multiple phases of the DevOps lifecycle, extending value far beyond the editor. Key integration points include:

AI-powered IDE integrations like GitHub Copilot, GoCodeo, and Amazon CodeWhisperer enhance developer productivity at the source level. These tools use large language models trained on large code corpora to suggest context-aware code completions, refactorings, and even function implementations.

By catching syntax errors, incorrect API usage, and even missing edge cases during authoring, AI tools reduce the volume of failed builds and test regressions. Suggestions are often aligned with common design patterns and security practices, enabling developers to write higher quality code from the outset.

AI suggestions can be tuned to follow organizational style guides, linting rules, and internal libraries. This consistency reduces friction during code reviews, simplifies debugging, and streamlines the onboarding process for new developers.

AI models can analyze pull requests, automatically suggest changes, detect potential vulnerabilities, and flag inconsistencies that traditional linters or rule-based systems may overlook. This drives faster review cycles and reduces the load on human reviewers.

One of the most time-consuming yet critical aspects of CI is testing. Writing comprehensive unit and integration tests manually is resource-intensive. AI tools are now capable of generating test cases based on the code context, control flow, and inferred specifications.

By analyzing function signatures, dependencies, and logic branches, AI tools like Diffblue Cover (which targets Java) and GoCodeo can auto-generate test suites. Across such tools, supported languages include Java, Python, and TypeScript, though coverage varies by tool. The generated tests aim to cover both positive and negative paths, which increases the robustness of the CI process.

Rather than relying solely on line or branch coverage metrics, AI tools can estimate behavioral coverage, meaning whether the tests exercise the behaviors that matter rather than just the lines that exist. This lets them detect untested edge cases, flag tests whose assertions do not match their stated intent, and suggest new test cases that target high-risk areas based on past commit history and defect data.

AI can correlate test failures with recent changes by using historical mappings between commits and the tests that failed after them. It then predicts where regressions are likely to occur, so the test engine can run the highest-risk tests first.
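The prioritization idea can be illustrated with a deliberately simple, non-ML sketch. It assumes the CI system can export past runs as pairs of changed files and failed tests; the function names `build_failure_index` and `prioritize_tests` and the file and test names are hypothetical, and a real tool would likely use a learned model rather than raw frequencies.

```python
from collections import defaultdict


def build_failure_index(history):
    """Count how often each test failed when each file was changed.

    `history` is a list of (changed_files, failed_tests) pairs,
    one pair per past CI run (an assumed export format).
    """
    co_failures = defaultdict(lambda: defaultdict(int))
    change_counts = defaultdict(int)
    for changed_files, failed_tests in history:
        for path in changed_files:
            change_counts[path] += 1
            for test in failed_tests:
                co_failures[path][test] += 1
    return co_failures, change_counts


def prioritize_tests(all_tests, changed_files, co_failures, change_counts):
    """Order tests by estimated failure risk for this change, highest first."""
    risk = {test: 0.0 for test in all_tests}
    for path in changed_files:
        seen = change_counts.get(path, 0)
        if seen == 0:
            continue  # no history for this file, so no signal
        for test, fails in co_failures.get(path, {}).items():
            if test in risk:
                # Keep the strongest signal across all changed files.
                risk[test] = max(risk[test], fails / seen)
    # sorted() is stable, so tests with equal risk keep their original order.
    return sorted(all_tests, key=lambda test: -risk[test])


# Hypothetical history: four past CI runs.
history = [
    (["billing.py"], ["test_invoice"]),
    (["billing.py", "auth.py"], ["test_invoice", "test_login"]),
    (["auth.py"], []),
    (["billing.py"], []),
]
co_failures, change_counts = build_failure_index(history)
order = prioritize_tests(
    ["test_login", "test_invoice", "test_search"],
    ["billing.py"],
    co_failures,
    change_counts,
)
print(order)  # ['test_invoice', 'test_login', 'test_search']
```

Running the riskiest tests first does not reduce total test time, but it shortens the time to the first meaningful failure signal.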

CI builds often become bloated as projects grow in size and complexity. AI models can predictively optimize build execution by modeling code dependencies, file change patterns, and prior build failures.

AI tools analyze the dependency graph of large monorepos or microservices to detect unaffected modules during code changes. This enables partial builds rather than triggering full pipeline executions. It reduces resource consumption and execution time significantly.
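Whatever model sits on top, the core of a partial build is a reverse walk over the dependency graph. The sketch below assumes the graph is already available as a mapping from each module to the modules it depends on; the function name `affected_modules` and the module names are illustrative only.

```python
from collections import defaultdict, deque


def affected_modules(dependencies, changed):
    """Return every module that must be rebuilt when `changed` modules change.

    `dependencies` maps a module name to the modules it depends on.
    """
    # Invert the graph: for each module, record who depends on it.
    dependents = defaultdict(set)
    for module, deps in dependencies.items():
        for dep in deps:
            dependents[dep].add(module)

    # Breadth-first walk from the changed modules through their dependents.
    affected = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


# Hypothetical monorepo layout.
dependencies = {
    "web": ["api"],
    "api": ["auth", "db"],
    "auth": ["db"],
    "db": [],
    "docs": [],
}
to_build = affected_modules(dependencies, ["auth"])
skipped = set(dependencies) - to_build
print(sorted(to_build))  # ['api', 'auth', 'web']
print(sorted(skipped))   # ['db', 'docs']
```

In this example a change to `auth` rebuilds three of the five modules; `db` and `docs` are untouched and can be skipped.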

Traditional caching mechanisms use timestamps and file hashes. AI caching layers consider contextual data such as build history, change patterns, and semantic diffs to decide what artifacts are safe to reuse. This reduces unnecessary cache invalidations and improves hit rates.
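One concrete, non-ML form of a "semantic diff" is to key the cache on the parsed structure of a file instead of its raw bytes. The following sketch does this for Python sources using the standard `ast` module; the function names are hypothetical, and it stands in for, rather than reproduces, whatever heuristics a commercial AI caching layer applies.

```python
import ast
import hashlib


def raw_cache_key(source: str) -> str:
    """Traditional key: any byte-level change invalidates the cache."""
    return hashlib.sha256(source.encode("utf-8")).hexdigest()


def semantic_cache_key(source: str) -> str:
    """Key based on the syntax tree, so comments and whitespace are ignored."""
    tree = ast.parse(source)
    # ast.dump() leaves out line and column attributes by default,
    # so reformatting the file does not change the dump.
    normalized = ast.dump(tree)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


original = "def add(x, y):\n    return x + y\n"
commented = "# adds two numbers\ndef add(x, y):\n\n    return x + y  # sum\n"
changed = "def add(x, y):\n    return x - y\n"

print(raw_cache_key(original) == raw_cache_key(commented))            # False
print(semantic_cache_key(original) == semantic_cache_key(commented))  # True
print(semantic_cache_key(original) == semantic_cache_key(changed))    # False
```

The output of `ast.dump` can differ between Python versions, so keys like these are only comparable when every build agent runs the same interpreter version.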

By observing trends in build failures across teams, branches, and environments, AI tools forecast potential build failures before they happen. Developers are proactively warned about fragile modules or misconfigured build scripts that could break the pipeline.

As code moves from test environments to staging and production, deployment checks become critical. AI enhances these checks by leveraging telemetry, logs, config files, and user behavior data to determine whether code is truly deployment-ready.

AI agents can simulate the effect of deploying a change across environments, score risks associated with performance degradation, broken dependencies, or resource misallocation, and prevent critical failures from reaching users.

AI continuously compares configuration files across environments and detects unintentional drifts. This ensures the CD pipeline is deploying into consistent infrastructure states, reducing environment-specific bugs.
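At its simplest, drift detection is a structured diff between two environment configurations. The sketch below assumes each configuration has already been loaded into a nested dictionary and that some differences (such as hostnames) are expected; `flatten`, `detect_drift`, and the `allowed` parameter are names invented for this example.

```python
class _Missing:
    """Sentinel for keys that exist in only one environment."""

    def __repr__(self):
        return "<missing>"


MISSING = _Missing()


def flatten(config, prefix=""):
    """Turn nested dictionaries into a flat {'a.b.c': value} mapping."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def detect_drift(baseline, candidate, allowed=frozenset()):
    """Return {path: (baseline_value, candidate_value)} for unexpected differences."""
    base, cand = flatten(baseline), flatten(candidate)
    drift = {}
    for path in sorted(base.keys() | cand.keys()):
        if path in allowed:
            continue  # this key is expected to differ per environment
        left = base.get(path, MISSING)
        right = cand.get(path, MISSING)
        if left != right:
            drift[path] = (left, right)
    return drift


# Hypothetical staging and production configurations.
staging = {
    "db": {"host": "staging-db", "pool_size": 10},
    "feature_flags": {"new_checkout": True},
}
production = {
    "db": {"host": "prod-db", "pool_size": 5},
    "feature_flags": {},
}
drift = detect_drift(staging, production, allowed={"db.host"})
for path, (left, right) in drift.items():
    print(f"{path}: staging={left!r} production={right!r}")
```

An AI layer would add value on top of a diff like this by learning which differences are routine and which have historically preceded incidents.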

Changes to databases, APIs, and data models can break downstream services. AI tools simulate schema changes, validate API compatibility, and generate synthetic data to test migration scripts before they are applied to live systems.
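A rule-based compatibility check gives a sense of what such validation has to catch. This sketch assumes a schema is represented as a mapping from field name to a small dictionary with `type` and `required` keys, which is a simplification invented for the example and not the format of any particular tool. It is conservative and treats the schema as used in both directions: removed fields break readers, and new required fields break writers.

```python
def breaking_changes(old_schema, new_schema):
    """List changes in `new_schema` that could break existing clients.

    Each schema maps a field name to {"type": str, "required": bool}.
    """
    problems = []
    for name, old in old_schema.items():
        new = new_schema.get(name)
        if new is None:
            problems.append(f"removed field: {name}")
        elif new["type"] != old["type"]:
            problems.append(
                f"type changed: {name} ({old['type']} -> {new['type']})"
            )
    for name, new in new_schema.items():
        # Optional additions are safe; required ones break old writers.
        if name not in old_schema and new.get("required", False):
            problems.append(f"new required field: {name}")
    return problems


# Hypothetical "user" schema before and after a migration.
old_schema = {
    "id": {"type": "int", "required": True},
    "email": {"type": "string", "required": True},
}
new_schema = {
    "id": {"type": "string", "required": True},
    "phone": {"type": "string", "required": True},
    "nickname": {"type": "string", "required": False},
}
for problem in breaking_changes(old_schema, new_schema):
    print(problem)
```

A CD pipeline could fail the deployment step whenever the returned list is non-empty, leaving the optional `nickname` addition to pass through.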

AI continues to add value after deployment by monitoring production systems, analyzing logs, and predicting future issues using observability data.

AI models trained on historical metrics can detect unusual patterns in CPU usage, memory consumption, or latency. They trigger alerts when behavior deviates from the learned baseline, without needing hand-written static thresholds for each metric.
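A rolling z-score is a much simpler statistical stand-in for a trained model, but it shows the same principle: the baseline comes from recent data, not from a fixed limit per metric. The function name, `window`, and `z_threshold` below are assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(samples, window=20, z_threshold=3.0):
    """Return indices of samples that deviate sharply from the recent baseline."""
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(samples):
        if len(history) == window:
            baseline = mean(history)
            spread = stdev(history)
            # With zero spread, any deviation from a flat baseline is flagged.
            if abs(value - baseline) > z_threshold * spread:
                anomalies.append(index)
                continue  # keep the outlier out of the baseline
        history.append(value)
    return anomalies


# Hypothetical CPU utilisation samples (percent), with one spike.
cpu = [50, 52, 49, 51, 50, 48, 52, 51, 49, 50, 95, 51, 50]
print(detect_anomalies(cpu, window=10))  # [10]
```

The only tunable here is sensitivity (`z_threshold`), which applies to every metric; there is no per-metric limit such as "alert above 90% CPU".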

Using graph-based or transformer-based models, AI tools correlate symptoms across services, link logs with traces, and narrow down the root cause of incidents. This significantly reduces Mean Time to Detect (MTTD) and Mean Time to Resolve (MTTR).

When integrated with incident management tools like PagerDuty or Opsgenie, AI can trigger predefined remediations such as restarting services, scaling nodes, or reverting deployments. Whether an action runs automatically depends on the model's confidence in its diagnosis and on how often that action has succeeded in the past.
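The decision logic can be sketched without calling any incident management API. Everything below is hypothetical: the `Remediation` class, the scoring rule (diagnosis confidence multiplied by a smoothed success rate), and the `min_score` cutoff are assumptions made for illustration, not how PagerDuty or Opsgenie work.

```python
from dataclasses import dataclass


@dataclass
class Remediation:
    name: str
    attempts: int
    successes: int

    @property
    def success_rate(self) -> float:
        # Laplace smoothing: an untried action starts at 0.5, not 0 or 1.
        return (self.successes + 1) / (self.attempts + 2)


def choose_remediation(candidates, diagnosis_confidence, min_score=0.7):
    """Return the action to run automatically, or None to escalate to a human."""
    best = max(candidates, key=lambda action: action.success_rate, default=None)
    if best is None:
        return None
    score = diagnosis_confidence * best.success_rate
    return best if score >= min_score else None


# Hypothetical track record for three predefined remediations.
candidates = [
    Remediation("restart_service", attempts=40, successes=36),
    Remediation("rollback_deploy", attempts=10, successes=9),
    Remediation("scale_nodes", attempts=5, successes=2),
]

confident = choose_remediation(candidates, diagnosis_confidence=0.9)
uncertain = choose_remediation(candidates, diagnosis_confidence=0.6)
print(confident.name if confident else "escalate")  # restart_service
print(uncertain.name if uncertain else "escalate")  # escalate
```

Returning `None` below the cutoff keeps a human in the loop whenever either the diagnosis or the action's track record is weak.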

To fully utilize AI in the CI/CD lifecycle, organizations must build an architecture that supports modular, extensible, and data-rich toolchains.

               ┌────────────────────┐
               │   AI Coding Tool   │
               │  (e.g., GoCodeo)   │
               └────────┬───────────┘
                        │
        ┌───────────────▼────────────────┐
        │ Version Control (e.g., GitHub) │
        └───────────────┬────────────────┘
                        │
             ┌──────────▼────────────┐
             │ CI Pipeline (e.g.     │
             │ GitHub Actions,       │
             │ Jenkins, CircleCI)    │
             └──────────┬────────────┘
                        │
        ┌───────────────▼────────────────┐
        │     AI-Powered Test Engine     │
        │ (Autogen tests, coverage maps) │
        └───────────────┬────────────────┘
                        │
             ┌──────────▼────────────┐
             │ CD Pipeline (e.g.     │
             │ ArgoCD, Spinnaker,    │
             │ GitLab CD)            │
             └──────────┬────────────┘
                        │
               ┌────────▼─────────┐
               │ AI Monitoring &  │
               │ Incident Mgmt    │
               └──────────────────┘

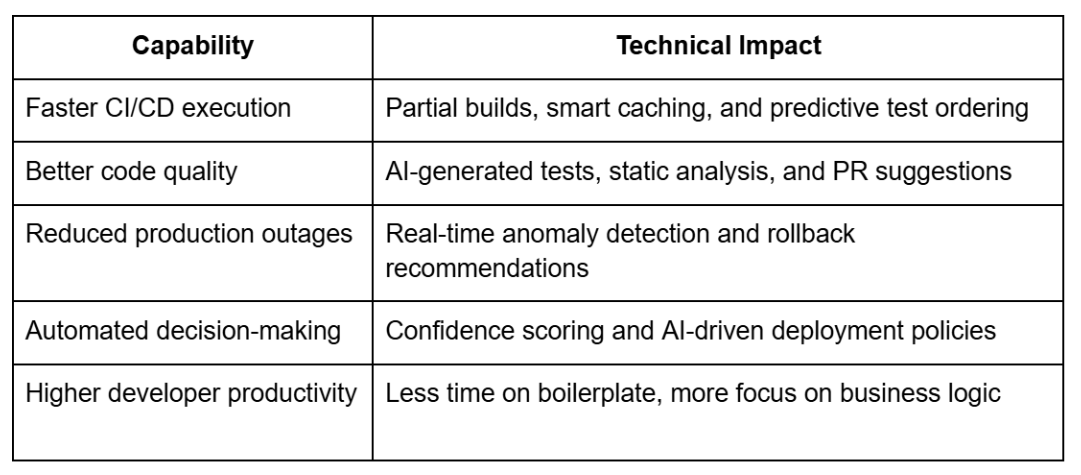

AI coding tools are evolving beyond their initial role as autocomplete engines. They are now core components in intelligent CI/CD pipelines, driving automation, enhancing reliability, and reducing latency across the entire software delivery lifecycle. Developers who embrace these tools gain a significant edge in building scalable, resilient, and high-velocity engineering systems. As adoption matures, the future of DevOps is undeniably AI-augmented, where pipelines are not just automated, but adaptive, predictive, and autonomous.