Integrating AI into the software delivery lifecycle is becoming a practical necessity for teams that want higher efficiency, lower failure rates, and shorter release cycles. Developers are expected to deliver robust, scalable, and secure applications quickly, and meeting that demand increasingly means applying AI across the whole pipeline, from the integrated development environment (IDE) to CI/CD. This post is a technical, phase-by-phase guide to building a continuous AI-powered development pipeline that embeds intelligence and automation into every stage.

Modern IDEs have transformed into intelligent development companions that proactively assist developers throughout the coding process. By integrating AI capabilities directly into the IDE, developers can streamline their workflows, enforce code quality standards, and reduce cognitive load.

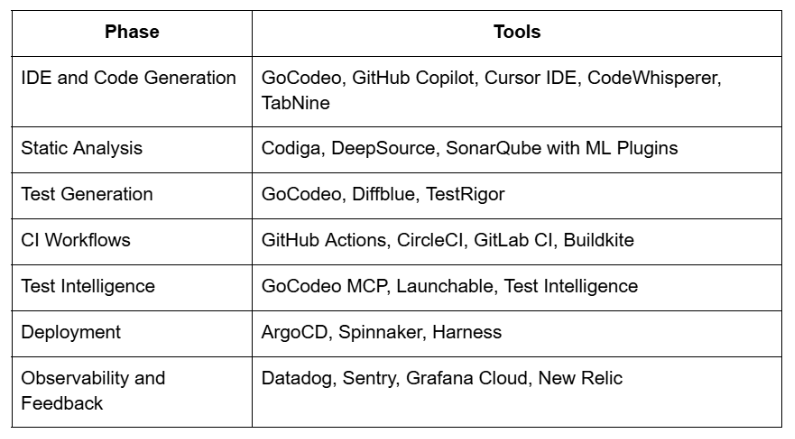

AI-powered code completion tools such as GoCodeo, GitHub Copilot, Amazon CodeWhisperer, and TabNine use large language models (LLMs) trained on vast codebases to generate code snippets, boilerplate structures, and function implementations. These tools provide context-aware suggestions that adapt to the current programming language, framework, and repository architecture.

For example, GoCodeo leverages abstract syntax tree (AST) analysis and project structure awareness to generate module-specific suggestions. Instead of merely inserting boilerplate code, the AI model considers file relationships, architectural layers, naming conventions, and style guides, ensuring higher semantic relevance. Additionally, the generation can extend across files, helping developers scaffold entire feature modules within seconds.

AI agents embedded in the IDE can perform real-time static analysis to identify code smells, anti-patterns, and refactoring opportunities. These systems use rule-based inference, machine learning classifiers, and reinforcement feedback to suggest changes. For instance, in a microservices project, the AI might suggest splitting a monolithic module into smaller independently deployable services based on cohesion-coupling metrics.

Tools like Refact.ai or GoCodeo's internal refactoring module detect complex patterns such as duplicated logic across services, inefficient data structures, or unscalable method chains. They go beyond simple linters: they are context-sensitive refactoring engines with capabilities such as automatic function decomposition, loop restructuring, interface abstraction, and dependency inversion.

Intelligent IDEs now provide seamless integration with version control systems. Tools like GoCodeo and Cursor IDE offer commit message generation based on diff summaries, which helps in maintaining clean and meaningful version histories. These systems can classify commits into feature, fix, chore, or refactor using natural language processing and machine learning classification algorithms.

Moreover, certain platforms can auto-generate changelogs, increment semantic versions, and tag release branches based on commit semantics, ensuring synchronization between development and CI triggers.
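The link between commit classification and version bumps can be sketched with a rule-based stand-in. The example below assumes commit messages follow the Conventional Commits prefix style (`feat:`, `fix:`, `refactor!:`), which the tools above may or may not use. An ML classifier would replace the regular expression, while the bump logic would stay the same. The function names `classify` and `next_version` are illustrative.

```python
import re

# Matches prefixes such as "feat(api): ...", "fix: ..." or "refactor!: ...".
_PREFIX = re.compile(r"^(?P<type>\w+)(\([^)]*\))?(?P<breaking>!)?:\s")


def classify(message):
    """Return (category, is_breaking) for a commit message."""
    match = _PREFIX.match(message)
    category = match.group("type").lower() if match else "chore"
    if category == "feat":
        category = "feature"
    breaking = bool(match and match.group("breaking")) or "BREAKING CHANGE:" in message
    return category, breaking


def next_version(current, messages):
    """Compute the next semantic version from the commits since `current`."""
    major, minor, patch = (int(part) for part in current.split("."))
    classified = [classify(message) for message in messages]
    if any(breaking for _, breaking in classified):
        return f"{major + 1}.0.0"
    if any(category == "feature" for category, _ in classified):
        return f"{major}.{minor + 1}.0"
    if any(category == "fix" for category, _ in classified):
        return f"{major}.{minor}.{patch + 1}"
    return current  # chores and refactors alone do not trigger a release
```

For instance, `next_version("1.4.2", ["feat(api): add endpoint", "fix: handle null"])` yields `"1.5.0"`, because the highest-impact commit in the range decides the bump.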

Before code reaches the CI/CD pipeline, AI can be used to validate changes and prevent broken builds. Pre-commit validation is the first line of defense in maintaining code quality and security.

Traditional static analyzers often flood developers with warnings, many of which are not actionable. Modern AI-enhanced analyzers, such as DeepSource, Codiga, and SonarQube with ML plugins, aim to prioritize and contextualize issues. These systems classify issues based on severity, likelihood of impact, and historical patterns observed across similar repositories.

For example, they may downgrade a rarely executed log-level misconfiguration but highlight a missing null check inside a frequently invoked data transformation function. These tools use Bayesian inference models, code vector embeddings, and repository graph analysis to derive issue relevance.
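To make the prioritization idea concrete, here is a minimal sketch that combines three signals: a severity weight, a Bayesian estimate of how often developers actually fix findings from a given rule, and how frequently the affected code runs. The weights, field names, and scoring formula are assumptions chosen for illustration and are not taken from any of the tools named above.

```python
import math
from dataclasses import dataclass

# Illustrative weights; a real analyzer would tune or learn these.
SEVERITY_WEIGHT = {"info": 1.0, "minor": 2.0, "major": 5.0, "critical": 10.0}


@dataclass
class Issue:
    rule: str
    severity: str
    calls_per_day: int  # how often the enclosing function executes
    fixed: int          # past findings of this rule that developers fixed
    dismissed: int      # past findings of this rule that were dismissed


def actionable_probability(issue, prior_fixed=1, prior_dismissed=1):
    """Posterior mean of a Beta prior updated with the fix/dismiss history."""
    fixed = issue.fixed + prior_fixed
    total = issue.fixed + issue.dismissed + prior_fixed + prior_dismissed
    return fixed / total


def priority(issue):
    """Higher scores surface first; log1p dampens very hot code paths."""
    return (
        SEVERITY_WEIGHT[issue.severity]
        * actionable_probability(issue)
        * math.log1p(issue.calls_per_day)
    )


def rank(issues):
    return sorted(issues, key=priority, reverse=True)
```

With this scoring, a frequently dismissed logging rule on a rarely executed path ranks below a missing null check in a hot function, which mirrors the example in the text.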

Writing exhaustive tests is often neglected due to time constraints. AI models trained on test patterns, functional specifications, and existing test suites can generate high-quality unit and integration tests. Platforms such as GoCodeo, Diffblue, and TestRigor can identify function contracts, boundary cases, and invariants to write tests that cover critical execution paths.

Developers can integrate these tools as pre-commit hooks, allowing for automatic test generation triggered by changes in specific files or modules. This helps achieve higher test coverage and confidence before code is committed to the main branch.
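A pre-commit hook for this workflow first has to decide which staged files need tests. The sketch below covers only that selection step and assumes a hypothetical layout in which `src/**/foo.py` is tested by `tests/test_foo.py`. The call to an actual test generator is left out, since the command-line interfaces of the tools above are not described here.

```python
import subprocess
from pathlib import PurePosixPath


def staged_files():
    """List files that are added, copied, or modified in the index."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only", "--diff-filter=ACM"],
        capture_output=True, text=True, check=True,
    )
    return [line for line in result.stdout.splitlines() if line]


def expected_test_path(source_path):
    """Map a source file to its test file under the assumed layout."""
    return str(PurePosixPath("tests") / f"test_{PurePosixPath(source_path).name}")


def modules_needing_tests(changed, existing_tests):
    """Return changed Python sources under src/ that have no test file yet."""
    existing = set(existing_tests)
    return [
        path for path in changed
        if path.startswith("src/") and path.endswith(".py")
        and expected_test_path(path) not in existing
    ]


def main():
    """Hook entry point; a non-zero return value blocks the commit."""
    tracked_tests = subprocess.run(
        ["git", "ls-files", "tests"],
        capture_output=True, text=True, check=True,
    ).stdout.splitlines()
    missing = modules_needing_tests(staged_files(), tracked_tests)
    for path in missing:
        print(f"no test file for {path}; expected {expected_test_path(path)}")
    return 1 if missing else 0
```

A hook script would end with `sys.exit(main())`. In a generation-enabled setup, the loop over `missing` is where a test generator would be invoked instead of printing.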

Using AI to monitor and gate commits based on code coverage deltas is an emerging best practice. AI tools can analyze previous builds and determine whether a newly added code path is adequately covered. If a drop in coverage is detected, the pipeline can be configured to block the commit or trigger a secondary review process.
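The gating logic itself can be quite small. The following sketch compares per-file coverage percentages from a baseline build with those of the current change and reports anything that should block the commit or trigger a review. The thresholds and the function name `coverage_gate` are illustrative assumptions, and the coverage numbers are expected to come from whatever coverage tool the project already uses.

```python
def coverage_gate(baseline, current, max_drop=0.5, new_file_min=80.0):
    """Return a list of human-readable violations; an empty list means pass.

    baseline, current: dicts mapping file path to line coverage in percent.
    max_drop: tolerated decrease, in percentage points, for existing files.
    new_file_min: minimum coverage required for files absent from baseline.
    """
    failures = []
    for path, after in sorted(current.items()):
        before = baseline.get(path)
        if before is None:
            if after < new_file_min:
                failures.append(
                    f"{path}: new file at {after:.1f}% (< {new_file_min:.1f}%)"
                )
        elif before - after > max_drop:
            failures.append(f"{path}: {before:.1f}% -> {after:.1f}%")
    return failures
```

A CI step or hook would fail when the returned list is non-empty. Where the text mentions a secondary review instead of a hard block, the same list could be posted as a review comment.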

CI systems have evolved from scripted automation to intelligent orchestration platforms. With AI embedded in CI, build, test, and reporting workflows become dynamic and context-aware.

CI pipelines can be optimized using dependency graphs and AI-based schedulers. Pipelines running on CircleCI, GitHub Actions, or GitLab CI can be paired with AI agents that predict build duration, identify bottlenecks, and parallelize jobs efficiently. Build artifacts can be cached selectively, based on historical job success rates and on diffs of the dependency set.

For example, GoCodeo’s integration with GitHub Actions uses a custom AI runner that classifies each pipeline job based on build graphs, module dependencies, and recent code changes. This ensures only relevant modules are rebuilt and tested, reducing time and resource consumption.
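The core of "rebuild only what is relevant" is a reverse traversal of the module dependency graph. The sketch below is a generic illustration of that idea, not GoCodeo's runner: given a mapping from each module to the modules it depends on, it returns the changed modules plus everything that transitively depends on them. The module names in the usage note are made up.

```python
from collections import defaultdict, deque


def affected_modules(deps, changed):
    """Return changed modules and all modules that transitively depend on them.

    deps: dict mapping a module name to the list of modules it depends on.
    changed: iterable of module names touched by the current change.
    """
    # Invert the graph so we can walk from a dependency to its dependents.
    dependents = defaultdict(set)
    for module, requirements in deps.items():
        for requirement in requirements:
            dependents[requirement].add(module)

    affected = set(changed)
    queue = deque(affected)
    while queue:
        current = queue.popleft()
        for dependent in dependents[current]:
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected
```

With `deps = {"api": ["auth", "billing"], "billing": ["db"], "auth": ["db"], "db": [], "docs": []}`, a change to `billing` affects only `billing` and `api`, so `auth`, `db`, and `docs` can reuse cached artifacts.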

AI-powered test orchestration platforms like Launchable and GoCodeo MCP use statistical models and historical telemetry to determine which test cases are most likely to fail given a specific code change. This process, known as test impact analysis, can prioritize and run high-risk tests first, providing fast feedback and enabling early detection of regressions.

Developers can configure dynamic test matrices that evolve over time as the AI learns from test outcomes. This shortens feedback time, limits how often flaky or irrelevant tests hold up a build, keeps the executed suite small, and preserves relevant coverage without overconsuming compute resources.
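Test impact analysis can be approximated with simple co-occurrence statistics. The sketch below records which tests failed when which files changed, then orders tests for a new change by a smoothed failure rate. It is a deliberately small stand-in for the statistical models mentioned above, and the class name `FailureHistory` and its methods are assumptions made for this example.

```python
from collections import Counter, defaultdict


class FailureHistory:
    """Tracks how often each test failed in runs where a given file changed."""

    def __init__(self):
        self.changes = Counter()              # file -> runs in which it changed
        self.failures = defaultdict(Counter)  # file -> test -> failure count

    def record(self, changed_files, failed_tests):
        """Store the outcome of one CI run."""
        for path in changed_files:
            self.changes[path] += 1
            for test in failed_tests:
                self.failures[path][test] += 1

    def risk(self, test, changed_files):
        """Sum of Laplace-smoothed failure rates over the changed files."""
        return sum(
            (self.failures[path][test] + 1) / (self.changes[path] + 2)
            for path in changed_files
        )

    def prioritize(self, tests, changed_files):
        """Order tests from highest to lowest risk; ties break by name."""
        return sorted(tests, key=lambda t: (-self.risk(t, changed_files), t))
```

Calling `record` after every run is what lets the ordering evolve over time. Running only the first N tests from `prioritize` gives a reduced matrix, at the cost of occasionally missing a regression in a low-ranked test.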

AI-enhanced deployment systems extend CI into delivery by offering smart release strategies. Platforms like Argo CD (typically paired with Argo Rollouts for progressive delivery), Harness, and Spinnaker can run policy-based checks that evaluate system metrics, error budgets, and release histories before approving rollouts.

These systems can automate canary deployments, gradually increasing traffic to new versions while monitoring logs and telemetry for anomalies. If regressions are detected, the AI engine can trigger automated rollbacks and root cause analysis.
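The control loop behind a canary rollout can be sketched as follows. Traffic is raised step by step, and the rollout is aborted as soon as the canary's error rate exceeds the baseline by more than a tolerance. The callback `error_rate_at` is a hypothetical stand-in for a telemetry query, and the fixed threshold stands in for whatever anomaly detection a real platform applies.

```python
def run_canary(steps, error_rate_at, baseline_error_rate, tolerance=0.01):
    """Walk through traffic percentages and decide to promote or roll back.

    steps: increasing traffic percentages, e.g. [5, 25, 50, 100].
    error_rate_at: callable taking a traffic percentage and returning the
        canary's observed error rate at that step (hypothetical hook).
    Returns ("promote", last_step) or ("rollback", failing_step).
    """
    for percent in steps:
        observed = error_rate_at(percent)
        if observed > baseline_error_rate + tolerance:
            # Stop immediately; earlier steps already limited the blast radius.
            return ("rollback", percent)
    return ("promote", steps[-1])
```

In practice each step would also wait for a soak period before sampling metrics, and a rollback would hand the failing step and its telemetry to root cause analysis.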

Feedback is a cornerstone of continuous delivery, and AI helps transform raw telemetry into actionable insights.

Observability platforms such as Datadog, New Relic, and Sentry have started using LLMs to summarize logs, correlate metrics, and provide anomaly detection. Developers can configure alerts that are not just threshold-based but semantic. For example, instead of triggering only on a spike in HTTP 500 errors, the system may alert when a rare error string appears in the log of a mission-critical service.

Using NLP and clustering algorithms, these tools identify root causes, suggest remediations, and even correlate new errors with recent commits, closing the feedback loop.
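A simplified version of rare-error detection and commit correlation is sketched below. Log messages are reduced to templates by masking numbers and hex values, templates never seen in the history are flagged, and the first occurrence is attributed to the most recent deploy before it. The regular expression is a crude substitute for the NLP and clustering described above, and all function names, timestamps, and commit hashes are illustrative.

```python
import re
from bisect import bisect_right
from collections import Counter

# Mask hex values and decimal numbers so variants of one message collapse.
_VARIABLE = re.compile(r"0x[0-9a-fA-F]+|\d+")


def template(message):
    return _VARIABLE.sub("<*>", message)


def rare_templates(history, window, max_seen=0):
    """Return templates in `window` seen at most `max_seen` times in `history`."""
    seen = Counter(template(message) for message in history)
    rare = []
    for message in window:
        candidate = template(message)
        if seen[candidate] <= max_seen and candidate not in rare:
            rare.append(candidate)
    return rare


def suspect_deploy(first_seen, deploys):
    """Return the commit of the latest deploy at or before `first_seen`.

    deploys: list of (timestamp, commit_sha) tuples sorted by timestamp.
    Returns None when the error predates every known deploy.
    """
    index = bisect_right([timestamp for timestamp, _ in deploys], first_seen)
    return deploys[index - 1][1] if index else None
```

Attributing an error to the latest deploy is only a heuristic. It narrows the search to a small set of commits, which a reviewer or a diff-aware model can then inspect.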

Developer experience and team performance can also be enhanced with AI-generated telemetry dashboards. These platforms collect metrics such as code churn, cycle time, coverage drift, and incident frequency to provide recommendations for process improvements.

By visualizing hotspots in code, unstable test modules, or release frequency trends, teams can identify patterns that impact velocity and quality. AI can then help prioritize the refactoring backlog or propose rewrites of unstable components.
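One simple way to surface hotspots is to normalize a few per-file signals and rank by their sum. The sketch below uses churn, incident count, and flaky test failures. The choice of signals, the equal weighting, and the names `FileStats` and `hotspots` are assumptions for illustration rather than a description of any particular dashboard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileStats:
    path: str
    churn: int           # lines added plus deleted in the reporting window
    incidents: int       # incidents traced back to this file
    flaky_failures: int  # intermittent failures in tests covering this file


def hotspots(stats, top=3):
    """Return the paths of the `top` files with the highest combined score."""

    def normalized(value, peak):
        # Scale each signal to [0, 1] so no single metric dominates by units.
        return value / peak if peak else 0.0

    max_churn = max((s.churn for s in stats), default=0)
    max_incidents = max((s.incidents for s in stats), default=0)
    max_flaky = max((s.flaky_failures for s in stats), default=0)

    scored = [
        (
            normalized(s.churn, max_churn)
            + normalized(s.incidents, max_incidents)
            + normalized(s.flaky_failures, max_flaky),
            s.path,
        )
        for s in stats
    ]
    # Highest score first; ties break alphabetically by path.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [path for _, path in scored[:top]]
```

The resulting list is a reasonable starting point for a refactoring backlog, since it favors files that are both changed often and associated with failures.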

Building a continuous AI-powered development pipeline is not about bolting AI on top of existing processes. It requires architectural thinking, deep integration across stages, and a commitment to continuous learning. From real-time code generation in the IDE to AI-governed deployments and post-release analysis, every stage can be infused with intelligence to accelerate feedback loops, reduce risk, and improve developer experience. The result is a resilient, scalable pipeline that adapts to change and evolves with your codebase.