The surge in AI-powered coding tools has introduced a paradigm shift in how software is written, tested, and deployed. Tools like GitHub Copilot, Amazon CodeWhisperer, and GoCodeo have increased developer productivity by offering real-time suggestions, automated test generation, and even full-stack code scaffolding. However, for development teams operating within regulated industries such as healthcare, finance, or critical infrastructure, these tools raise significant concerns around regulatory compliance, verifiability of logic, auditability of code changes, and trustworthiness of automated suggestions.

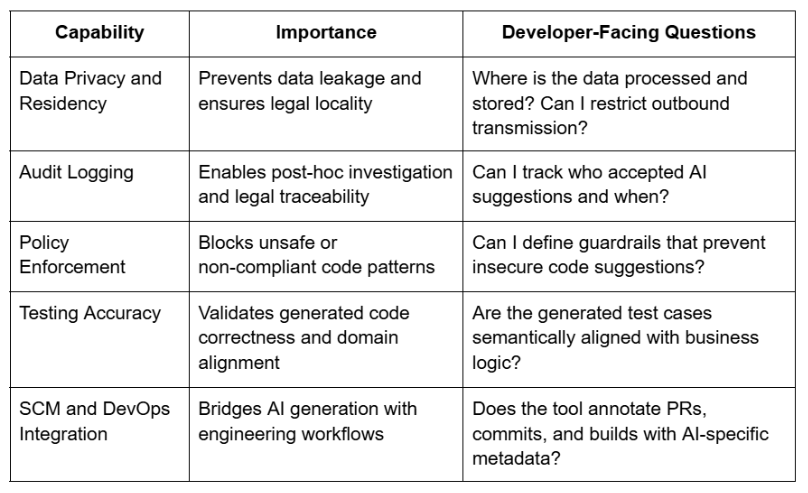

Regulations and standards such as GDPR, HIPAA, SOC 2, and ISO 27001 impose strict controls around data handling, source code traceability, access logging, and testing accountability. AI-generated code must not only work correctly; it must also meet legal and procedural requirements. This post presents a technical evaluation framework for assessing AI coding tools through the lenses of compliance, automated testing, and traceability, tailored for developers, architects, and DevSecOps professionals.

The introduction of AI into the software development lifecycle amplifies the impact of coding decisions. An erroneous suggestion accepted by a developer may introduce security flaws, data exposure vulnerabilities, or violations of business logic, especially if not caught during manual review. Since AI suggestions are probabilistically generated and influenced by pretraining datasets, they may unintentionally reproduce unsafe or non-compliant patterns.

Organizations subject to data governance laws and software quality frameworks cannot afford to integrate AI tooling without safeguards. Regulatory bodies demand not just functional correctness but process visibility: who generated the code, under what policy, and under what constraints? These are no longer academic questions; they are legal and operational imperatives.

When bugs or regressions occur in production, traceability features allow teams to map defects back to their origin. In AI-augmented development environments, this means knowing exactly which lines were suggested by the AI, which model version produced them, who accepted the suggestion, and in what context. Regulated pipelines need this level of forensic detail to support audits and incident investigations.
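To make these four questions concrete, here is a minimal sketch of a provenance record that a team might write to an append-only audit log whenever a suggestion is accepted. The class name, field names, and the `example-model-v1` identifier are illustrative assumptions, not the schema of any particular tool.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class SuggestionRecord:
    """One accepted AI suggestion (hypothetical schema for illustration)."""
    file_path: str
    start_line: int          # which lines were suggested by the AI
    end_line: int
    model_version: str       # which model version produced them
    accepted_by: str         # who accepted the suggestion
    prompt_context: str      # in what context it was requested
    suggestion_sha256: str   # fingerprint of the exact suggested text
    accepted_at: str         # ISO 8601 timestamp in UTC


def record_suggestion(*, file_path: str, start_line: int, end_line: int,
                      suggestion_text: str, model_version: str,
                      accepted_by: str, prompt_context: str,
                      accepted_at: Optional[datetime] = None) -> SuggestionRecord:
    """Build an immutable record; hash the text instead of storing it."""
    timestamp = accepted_at or datetime.now(timezone.utc)
    digest = hashlib.sha256(suggestion_text.encode("utf-8")).hexdigest()
    return SuggestionRecord(
        file_path=file_path,
        start_line=start_line,
        end_line=end_line,
        model_version=model_version,
        accepted_by=accepted_by,
        prompt_context=prompt_context,
        suggestion_sha256=digest,
        accepted_at=timestamp.isoformat(),
    )


def to_audit_line(record: SuggestionRecord) -> str:
    """Serialize to one JSON line with stable key order for log diffing."""
    return json.dumps(asdict(record), sort_keys=True)
```

Storing a hash rather than the suggestion text is one possible design choice: it lets an investigator confirm that a line in the repository matches what was accepted without copying source code into the log.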

AI tools vary significantly in how they process and store user code. Some operate purely client-side, others transmit snippets to cloud-based inference APIs, and some retain submitted code for model fine-tuning. For compliance:

A compliance-aware AI coding tool should come with support for established security frameworks:

In high-risk environments, developers must have the ability to enforce static and dynamic policies during code generation. Look for tools that support:
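As one illustration of a static policy, the sketch below scans a generated snippet line by line and reports rule violations before the code is accepted. The three rules and their regular expressions are assumptions chosen for the example; a real policy set would be broader and would likely rely on a proper static analyzer rather than regexes.

```python
import re
from typing import List, NamedTuple


class Violation(NamedTuple):
    rule: str
    line_no: int
    text: str


# Hypothetical policy rules, for illustration only.
POLICY_RULES = {
    "hardcoded-secret": re.compile(
        r"(?i)\b(password|api_key|secret)\s*=\s*['\"][^'\"]+['\"]"
    ),
    "weak-hash": re.compile(r"\bhashlib\.(md5|sha1)\("),
    "dynamic-eval": re.compile(r"\b(eval|exec)\("),
}


def check_policy(code: str) -> List[Violation]:
    """Return every rule violation found in the generated code."""
    violations = []
    for line_no, line in enumerate(code.splitlines(), start=1):
        for rule, pattern in POLICY_RULES.items():
            if pattern.search(line):
                violations.append(Violation(rule, line_no, line.strip()))
    return violations
```

A gate built on a check like this could reject or flag a suggestion when the returned list is non-empty, which keeps the policy decision outside the model itself.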

For auditability and incident response, it is essential to capture telemetry around AI-generated code. Effective tools should provide:

Automated testing workflows are central to code quality. AI-powered tools that assist with test generation must integrate seamlessly with your build and deploy pipelines. Evaluate:

High-quality test generation depends on semantic understanding, not just syntactic parsing. Advanced tools leverage LLMs to reason about method behavior, dependencies, side effects, and business rules.

Regulatory compliance often mandates specific behaviors, such as encryption of sensitive fields, redaction of PII, and restricted access paths. Evaluate whether the AI tool can:
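A minimal sketch of what a compliance-oriented test could look like, using PII redaction as the example behavior. The `redact_pii` helper, its patterns, and the placeholder tokens are hypothetical; they stand in for whatever redaction logic a real codebase would have, and the patterns are deliberately simplistic.

```python
import re

# Simplified patterns for illustration; production redaction needs more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(message: str) -> str:
    """Replace email addresses and US-style SSNs with placeholder tokens."""
    message = EMAIL_RE.sub("[REDACTED_EMAIL]", message)
    return SSN_RE.sub("[REDACTED_SSN]", message)


def test_email_is_redacted():
    out = redact_pii("login failed for jane.doe@example.com")
    assert "jane.doe@example.com" not in out
    assert out == "login failed for [REDACTED_EMAIL]"


def test_ssn_is_redacted():
    out = redact_pii("applicant SSN 123-45-6789 verified")
    assert "123-45-6789" not in out
    assert out == "applicant SSN [REDACTED_SSN] verified"


def test_non_pii_text_is_unchanged():
    assert redact_pii("order 42 shipped") == "order 42 shipped"
```

The point of the sketch is the shape of the tests: each one asserts a compliance property (the sensitive value never appears in the output) rather than only checking that the function returns without error.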

Developers must ensure that AI-generated tests provide measurable improvements in test coverage and risk mitigation. Tools should provide:

For teams to maintain accountability, it must be explicitly clear which code was written by a human and which was suggested by the AI. Look for capabilities such as:

Some LLMs may replicate code patterns that resemble public repositories, including open source under restrictive licenses. For compliance with IP and license rules:

Post-deployment traceability is critical when diagnosing incidents or breaches. Evaluate how the AI coding tool integrates with observability and monitoring tools:

GoCodeo is an AI coding agent designed for developers building full-stack applications with built-in support for Supabase and Vercel. It offers a structured AI development loop:

GoCodeo integrates with GitHub, offering full traceability of AI-generated changes, tagging each commit with authorship metadata, versioned model identifiers, and change rationale. Tests generated through the agent are CI-executable and come with detailed coverage reports, highlighting any compliance blind spots.
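Commit-level tagging of this kind can be checked mechanically in CI. The sketch below assumes the metadata is carried as `Key: value` trailer lines in the final paragraph of the commit message; the trailer keys (`AI-Assisted`, `AI-Model`, `Accepted-By`) are hypothetical and are not taken from GoCodeo's documentation, and the parsing is a simplification of Git's own trailer rules.

```python
from typing import Dict


def parse_trailers(commit_message: str) -> Dict[str, str]:
    """Parse 'Key: value' lines from the last paragraph of a commit message.

    Returns an empty dict when the last paragraph is not a pure trailer block.
    """
    paragraphs = [p for p in commit_message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # subject line only, so there is no trailer block
    trailers = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        if not sep or " " in key.strip():
            return {}  # ordinary prose, not a trailer line
        trailers[key.strip()] = value.strip()
    return trailers


def is_ai_assisted(commit_message: str) -> bool:
    """True when the hypothetical AI-Assisted trailer is set to 'true'."""
    return parse_trailers(commit_message).get("AI-Assisted", "").lower() == "true"
```

A CI step could use a check like `is_ai_assisted` to require an extra reviewer or a stricter test gate for tagged commits.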

AI coding tools are redefining how software is built, but speed and convenience must not come at the cost of compliance, verifiability, and engineering discipline. For developers working in security-conscious or legally bound industries, integrating such tools demands a rigorous evaluation across data handling, traceability, automated testing, and policy alignment.

As AI agents continue to evolve, the future of coding will be shared between human developers and intelligent machines. Ensuring that this relationship is governed, observable, and accountable is the only sustainable path forward for software development in regulated domains.