As the AI landscape evolves, 2025 has brought significant advancements in how developers interact with AI-powered coding assistants. Two of the most prominent players in this space are Claude 3 Opus by Anthropic and ChatGPT, running GPT-4.5 / GPT-4o, by OpenAI. While both models are capable of code generation, debugging, and natural language-based reasoning, their performance across developer workflows is far from identical. For engineers, architects, and toolchain specialists, understanding the technical distinctions between Claude and ChatGPT is vital.

This post offers a developer-centric breakdown of how both tools perform in actual coding environments. Our analysis spans architectural underpinnings, real-world reasoning capabilities, language support, IDE and tooling integration, agentic execution, debugging workflows, and ecosystem maturity, all framed through the lens of modern software engineering.

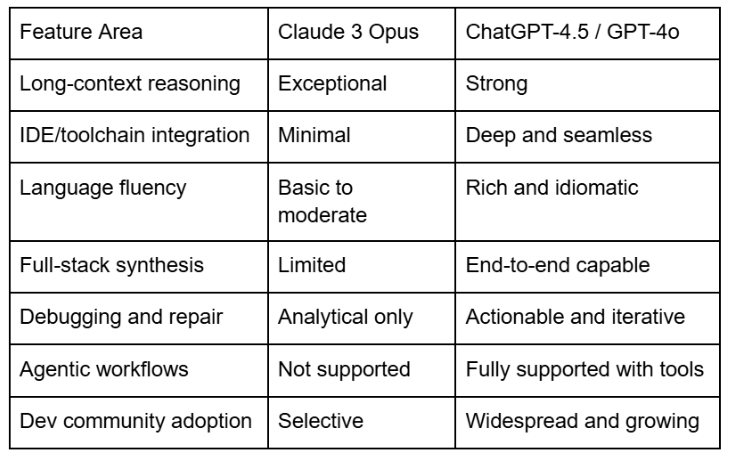

Claude 3 Opus, the flagship of Anthropic's Claude 3 model family, is optimized for large-context reasoning. Built on the principles of Constitutional AI, it performs exceptionally well in understanding complex documents, maintaining structured conversations, and offering nuanced architectural feedback. Claude offers a context window of 200,000 tokens, making it a strong candidate for reading through lengthy technical documents, multi-file repositories, or verbose logs.
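To make the context-window figure concrete, here is a minimal sketch of how a developer might check whether a set of files is likely to fit before sending it. The ratio of roughly four characters per token is only a common rule of thumb, not the model's real tokenizer, and the names `estimate_tokens`, `fits_in_context`, and `reserved_for_reply` are hypothetical helpers invented for this illustration.

```python
# Assumption: ~4 characters per token is a rough heuristic for English text
# and code. Real tokenizers vary, so treat the result as an estimate only.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 200_000  # the 200,000-token figure mentioned in the text


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for a string (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserved_for_reply: int = 4_000) -> bool:
    """Check whether all documents plus a reply budget fit in the window."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserved_for_reply <= CONTEXT_LIMIT
```

Reserving part of the budget for the reply is a design choice in this sketch: a prompt that fills the window exactly leaves no room for the model to answer.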

From a developer perspective, Claude’s architecture exhibits strengths in:

However, its weaknesses emerge when transitioning from comprehension to execution. Claude frequently requires precise prompting scaffolds for multistep coding tasks and is less reliable when asked to stitch together complete feature implementations across layers.

GPT-4.5 and GPT-4o, successors to GPT-4-turbo, present a major leap in multimodal, real-time performance. With unified text, code, audio, and image reasoning, GPT-4o is the first to truly function as a real-time agentic assistant. Architecturally, GPT-4o emphasizes:

For developers, this translates to superior performance in dynamic workflows where modular planning, language-specific idioms, and real-time feedback are critical.

Conclusion: Claude leads in comprehension-heavy tasks like reviewing technical specs or RFCs. ChatGPT excels in applied code generation, execution reasoning, and rapid iterative development.

Anthropic has taken a conservative stance when it comes to embedding Claude into traditional developer workflows. As of mid-2025, Claude lacks first-party plugins or deep IDE integrations. Most usage is mediated via third-party wrappers such as Sourcegraph Cody or web-based terminals.

This poses tangible limitations:

While Claude can provide intelligent insights when prompted explicitly with file contents or logs, it lacks the proactive, real-time guidance expected in modern developer tooling.
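Prompting "explicitly with file contents or logs" usually means assembling the context by hand. The sketch below shows one plausible way to do that in plain Python. The function name `build_prompt` and the `=== path ===` delimiter are assumptions made for illustration, not a format that either vendor prescribes.

```python
def build_prompt(files: dict[str, str], question: str) -> str:
    """Concatenate labeled file contents ahead of the question.

    `files` maps a path (used only as a label) to that file's text.
    """
    sections = []
    for path, content in files.items():
        # Label each file so the model can refer back to it by name.
        sections.append(f"=== {path} ===\n{content.rstrip()}")
    sections.append(f"Question: {question}")
    return "\n\n".join(sections)
```

The resulting string can be pasted into a chat window or passed as the user message of whichever client library is in use.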

ChatGPT’s integration into the developer ecosystem is deep and well-engineered. With support for VS Code, JetBrains IDEs, Cursor IDE, and code-native extensions, ChatGPT acts as a fully embedded coding partner. It facilitates:

Its native integration with tools like GoCodeo further enhances ChatGPT's utility as a dev agent, executing full-stack builds, schema migrations, and deployments via AI-driven workflows.

Conclusion: ChatGPT offers a frictionless developer experience within IDEs and terminals. Claude remains tool-agnostic, relying on manual context injection and limited ecosystem exposure.

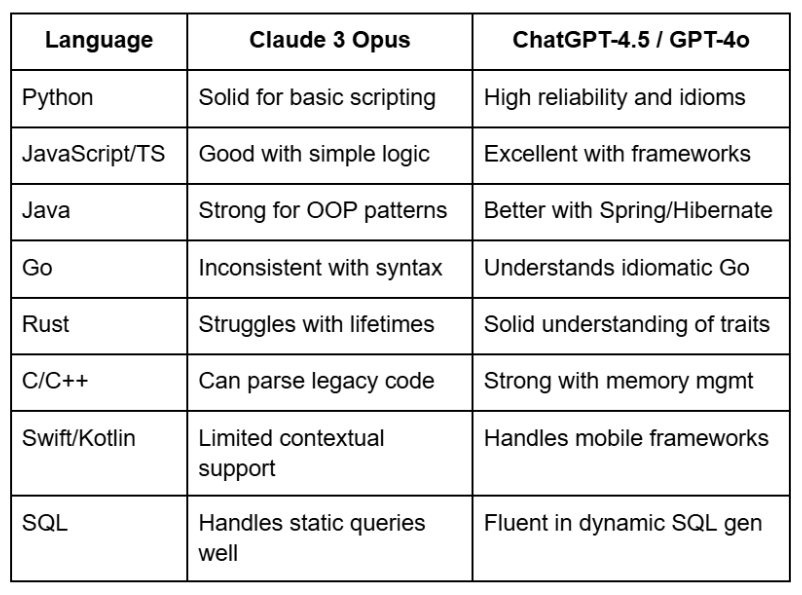

Claude often relies on general pattern-matching to generate code, which may lead to semantically correct but syntactically flawed outputs in niche languages. ChatGPT demonstrates contextual awareness and deeper fluency in idiomatic usage, error handling, and edge cases, especially in dynamically typed languages and full-stack scenarios.

Conclusion: For language-specific expertise, ChatGPT consistently outperforms Claude across backend, frontend, and infrastructure languages.

Modern software development requires reasoning across components: frontend UI logic, backend APIs, database schemas, infrastructure orchestration, and CI/CD automation.

Claude’s reasoning model is impressive when tasked with interpreting high-level blueprints or workflows. It can:

However, Claude does not perform well when required to actively generate code that spans multiple domains (e.g., frontend UI + backend API + database schema). It lacks persistent state memory across multi-file prompts and has no internal capability to simulate agentic planning or task execution.
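When a model keeps no state between multi-file prompts, the caller can carry that state instead. The following is a minimal sketch of the idea, assuming a hypothetical `SessionNotes` helper that records earlier decisions and prepends them to each new prompt. It illustrates the workaround only and does not describe how either assistant works internally.

```python
class SessionNotes:
    """Caller-side memory: keeps short notes and prepends them to each prompt.

    Assumes max_notes >= 1.
    """

    def __init__(self, max_notes: int = 20) -> None:
        self.max_notes = max_notes
        self.notes: list[str] = []

    def remember(self, note: str) -> None:
        """Record a decision, dropping the oldest notes beyond the cap."""
        self.notes.append(note)
        self.notes = self.notes[-self.max_notes:]

    def wrap(self, prompt: str) -> str:
        """Return the prompt preceded by the notes recorded so far."""
        if not self.notes:
            return prompt
        header = "\n".join(f"- {n}" for n in self.notes)
        return f"Decisions so far:\n{header}\n\n{prompt}"
```

For example, after generating a database schema, a developer might call `remember("users table has columns id, email")` so that the later prompt for the backend API starts from the same assumption.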

ChatGPT has matured into a multi-agent execution engine, particularly when paired with tools like GoCodeo or Cursor IDE. Developers can issue high-level tasks such as:

Behind the scenes, ChatGPT orchestrates:

Conclusion: Claude understands the what; ChatGPT builds the how. ChatGPT is production-ready for agentic full-stack automation.

Claude excels in passive diagnostics. It can:

However, Claude’s follow-through is limited. It lacks the mechanism to:

ChatGPT’s debugging capabilities are tightly integrated into developer tooling. It can:

It also adapts based on historical debugging context, making it effective for iterative triaging and test-driven development.

Conclusion: Claude diagnoses. ChatGPT diagnoses and fixes.

Recent developer surveys, GitHub usage trends, and tool adoption reports paint a clear picture:

Across repositories and coding environments:

In 2025, developers looking to understand systems may find value in Claude. But those aiming to build, ship, and maintain code will find ChatGPT far more practical, versatile, and integrated.