At a glance, the differences between Agentic AI and Generative AI may appear superficial, especially to those who have only worked with transformer-based LLMs. However, the underlying computational models, system architectures, and operational scopes of each approach diverge significantly. Developers building real-world systems must understand these core distinctions to make architectural decisions that align with scalability, maintainability, and automation goals.

Generative AI refers to models that generate content in a stateless, prompt-response fashion. Think of GPT-4 or Claude 3 completing a sentence or generating code based on a one-shot instruction. These models are fundamentally predictive text generators. They rely solely on the prompt input and do not persist state across turns; any apparent memory comes from the calling application resending earlier turns inside the context window.

In contrast, Agentic AI is goal-oriented, stateful, and iterative. It is not just about generating content, but about achieving a defined outcome using reasoning, planning, tool usage, and memory. Agentic AI typically wraps around foundational LLMs, augmenting them with planning mechanisms, decision policies, and external tool execution layers. This creates a system that acts like an intelligent software entity with defined roles and objectives.

While Generative AI systems are primarily transformer models accessed via API or fine-tuned endpoints, Agentic AI systems are often multi-component orchestrated stacks. From a developer's lens, understanding the architectural stack is crucial.

The goal-interpretation layer maps user intent or high-level objectives into machine-executable goals. It includes semantic parsing, task classification, and sometimes embedding-based mapping. It ensures the agent understands not just the "what" but the "why" of a task.
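As a rough illustration of task classification, the sketch below turns a free-text request into a structured goal. The `Goal` dataclass, the `KEYWORDS` table, and `interpret` are hypothetical names invented for this example; a real system would more likely use an LLM call or embedding similarity than substring matching.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Goal:
    task_type: str                      # the "what": a coarse task category
    objective: str                      # the "why": the original request, kept verbatim
    constraints: List[str] = field(default_factory=list)

# Hypothetical keyword table; substring matching is a stand-in for a real classifier.
KEYWORDS = {
    "refactor": ("refactor", "clean up", "restructure"),
    "test": ("unit test", "coverage"),
    "deploy": ("deploy", "ship"),
}

def interpret(request: str) -> Goal:
    """Map a free-text request to a structured Goal the planner can act on."""
    text = request.lower()
    for task_type, words in KEYWORDS.items():
        if any(word in text for word in words):
            return Goal(task_type=task_type, objective=request)
    return Goal(task_type="unknown", objective=request)
```

Keeping the original request on the goal object lets later stages re-read the user's intent instead of relying only on the coarse label.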

Planning is where Agentic AI differentiates itself most sharply. This engine is responsible for generating intermediate steps, task graphs, or execution plans. Developers may employ strategies like ReAct (reasoning and acting), Tree of Thoughts, or finite state machines. This module often involves iterative calls to LLMs for decision-making and tree traversal.
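One simple way to represent an execution plan is a dependency graph that gets resolved into an ordered list of steps. The sketch below uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+); the step names in `PLAN` are made up for illustration, and in a real agent the graph would typically be produced by an LLM call rather than hard-coded.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each step maps to the set of steps it depends on.
PLAN = {
    "refactor": set(),
    "lint": {"refactor"},
    "generate_tests": {"refactor"},
    "deploy": {"lint", "generate_tests"},
}

def execution_order(task_graph):
    """Return one valid ordering in which every step follows its dependencies."""
    return list(TopologicalSorter(task_graph).static_order())

order = execution_order(PLAN)
```

Steps with no dependency between them (here `lint` and `generate_tests`) may come out in either order, so an executor should only rely on the dependency constraints, not on a specific sequence.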

Agents must execute on their plans. This is where shell access, RESTful APIs, web browsers, file systems, and cloud deployment tools get integrated. Developers define tool schemas that agents can invoke, including tool selection policies and error handling paths.
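A minimal sketch of a tool layer might look like the following. `Tool`, `ToolRegistry`, and the `word_count` tool are hypothetical names for this example; the point is only that every invocation goes through one place that handles unknown tools and runtime errors, so the planner always receives a structured result.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

@dataclass
class Tool:
    name: str
    description: str            # shown to the model when it selects a tool
    run: Callable[..., Any]

class ToolRegistry:
    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def invoke(self, name: str, **params: Any) -> dict:
        """Run a tool and always return a structured result, never raise."""
        tool = self._tools.get(name)
        if tool is None:
            return {"ok": False, "error": f"unknown tool: {name}"}
        try:
            return {"ok": True, "result": tool.run(**params)}
        except Exception as exc:  # error path: report the failure back to the planner
            return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}

registry = ToolRegistry()
registry.register(
    Tool("word_count", "Count words in a string", lambda text: len(text.split()))
)
```

Returning `{"ok": False, ...}` instead of raising is a design choice: it lets the agent treat tool failures as observations it can reason about.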

Unlike Generative AI, where the session is often stateless, Agentic systems depend on robust memory mechanisms. Developers typically integrate vector databases (like Chroma or Pinecone), SQL-based logging, or Redis-based caches to store task history, knowledge graphs, and action traces.
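To make the idea of an action trace concrete, here is a small sketch using the standard library's `sqlite3` module as a stand-in for SQL-based logging. The `TraceStore` class and its table layout are assumptions made for this example, not a prescribed schema.

```python
import json
import sqlite3

class TraceStore:
    """Hypothetical action-trace log backed by SQLite."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS traces ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "task TEXT, action TEXT, result TEXT)"
        )

    def record(self, task, action, result):
        # Results are stored as JSON so arbitrary structured output can be kept.
        self.db.execute(
            "INSERT INTO traces (task, action, result) VALUES (?, ?, ?)",
            (task, action, json.dumps(result)),
        )
        self.db.commit()

    def history(self, task):
        """Return (action, result) pairs for a task in the order they happened."""
        rows = self.db.execute(
            "SELECT action, result FROM traces WHERE task = ? ORDER BY id", (task,)
        )
        return [(action, json.loads(result)) for action, result in rows]
```

On each step the agent can call `history(task)` and feed the returned trace back into the next prompt, which is what gives an otherwise stateless model continuity.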

A feedback mechanism lets the agent assess output validity and refine plans. This could involve auto-evaluation heuristics, scoring functions, human-in-the-loop evaluations, or retry policies based on failure modes. It turns the agent from a static program into an adaptive system.
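A retry policy keyed on failure mode can be sketched as below. The two exception classes and the `ok` / `replan` / `escalate` statuses are hypothetical conventions chosen for this example: transient failures are retried, permanent ones go back to the planner, and exhausted retries are handed to a human.

```python
class TransientError(Exception):
    """Hypothetical failure mode worth retrying, e.g. a timeout."""

class PermanentError(Exception):
    """Hypothetical failure mode that retrying cannot fix, e.g. invalid input."""

def run_with_policy(action, max_attempts=3):
    """Run `action` and decide what the agent should do next based on how it fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            return {"status": "ok", "result": action(), "attempts": attempt}
        except TransientError:
            continue  # same action, try again
        except PermanentError as exc:
            # Retrying will not help; hand the reason back to the planner.
            return {"status": "replan", "reason": str(exc), "attempts": attempt}
    # Retries exhausted: a natural point for human-in-the-loop review.
    return {"status": "escalate", "reason": "retries exhausted", "attempts": max_attempts}
```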

For developers designing workflows that cannot be resolved in a single inference cycle, Agentic AI is essential. Tasks that span multiple logical steps with variable branching, such as codebase refactoring followed by test generation and deployment, benefit immensely from an agentic approach.

Consider building a CI pipeline agent. It must clone a repo, identify test coverage gaps, generate unit tests, commit changes, and verify the build. This involves dynamic decision-making, multiple subprocess calls, and state transitions. A single stateless generation call cannot carry context from one step to the next or wait on asynchronous results such as a running build, so the orchestration has to live outside the model.
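The state transitions in such a pipeline can be sketched as a small finite state machine. Everything here is illustrative: the `State` names, the `TRANSITIONS` table, and the convention that each step is a callable returning `True` on success are assumptions, and the real steps would shell out to git, a coverage tool, and an LLM.

```python
from enum import Enum, auto

class State(Enum):
    CLONE = auto()
    ANALYZE = auto()
    GENERATE = auto()
    COMMIT = auto()
    VERIFY = auto()
    DONE = auto()
    FAILED = auto()

# For each state: (next state on success, next state on failure).
TRANSITIONS = {
    State.CLONE: (State.ANALYZE, State.FAILED),
    State.ANALYZE: (State.GENERATE, State.FAILED),
    State.GENERATE: (State.COMMIT, State.FAILED),
    State.COMMIT: (State.VERIFY, State.FAILED),
    State.VERIFY: (State.DONE, State.GENERATE),  # a red build loops back to generation
}

def run_pipeline(steps, max_transitions=20):
    """Drive the pipeline; `steps` maps each State to a callable(context) -> bool."""
    state, context = State.CLONE, {"history": []}
    transitions = 0
    while state not in (State.DONE, State.FAILED):
        if transitions >= max_transitions:  # guard against endless retry loops
            return State.FAILED, context
        context["history"].append(state.name)
        succeeded = steps[state](context)
        on_success, on_failure = TRANSITIONS[state]
        state = on_success if succeeded else on_failure
        transitions += 1
    return state, context
```

The shared `context` dictionary is where the agent would keep the repo path, coverage report, and generated tests between steps.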

In many developer-facing use cases, LLMs must interact with external systems. Whether it is calling APIs, writing to a file system, querying databases, or launching cloud environments, Agentic AI is designed to interface with these systems programmatically.

Suppose you are building an internal developer assistant that can scaffold backend services using templates, deploy them to Kubernetes, and expose logs for debugging. This task cannot be handled by Generative AI alone. An agentic system, integrated with tool schemas and action evaluators, can sequence and orchestrate these tasks.

Tasks that unfold over long durations and require system-level persistence are beyond the scope of single-turn generative models. Agentic AI supports agents that remain active, monitor environments, adapt to change, and react over hours or days.

A product roadmap assistant that monitors JIRA tickets, updates OKR dashboards, schedules follow-ups, and alerts based on deadlines is best architected as an agentic system with time-triggered behaviors and persistent memory.
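A time-triggered behavior often reduces to a check that runs on every timer tick against persisted state. The sketch below shows one such check; the `Ticket` fields and the two-day default window are assumptions for illustration and do not reflect the JIRA data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class Ticket:
    key: str
    due: datetime
    done: bool = False

def due_soon(tickets, now, window=timedelta(days=2)):
    """Return keys of open tickets that are overdue or due within `window` of `now`."""
    return [t.key for t in tickets if not t.done and t.due - now <= window]
```

Passing `now` in as an argument, rather than calling `datetime.now()` inside the function, keeps the check deterministic and easy to test.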

Agentic AI can modify its actions based on conditional logic derived from environment states or prior decisions. This event-driven reactivity is essential for automation workflows.

Imagine a server health monitoring agent. It observes CPU and memory stats, detects anomalies, scales pods via API, and alerts the dev team. This requires active state monitoring, decision branches, and tool invocation. A bare generative model has no runtime hooks or conditional pipelines of its own, so these have to be supplied by the surrounding agent.
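The decision branch at the heart of such an agent can be as plain as the function below. The thresholds, the `max_replicas` cap, and the `("scale", n)` / `("alert", message)` action tuples are all assumptions for this sketch; the actual scaling and alerting would be separate tool calls.

```python
def decide(cpu_percent, mem_percent, replicas, max_replicas=10):
    """Return a list of (action, argument) tuples for the current readings."""
    actions = []
    overloaded = cpu_percent > 85 or mem_percent > 90   # assumed thresholds
    idle = cpu_percent < 20 and mem_percent < 30        # assumed thresholds
    if overloaded:
        if replicas < max_replicas:
            actions.append(("scale", replicas + 1))
        else:
            actions.append(("alert", "overloaded at max replicas"))
    elif idle and replicas > 1:
        actions.append(("scale", replicas - 1))
    return actions
```

Keeping the decision pure (readings in, actions out) separates the policy from the side effects and makes the branches straightforward to unit test.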

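Agentic systems often incorporate modules for critique and reflection. This allows agents to evaluate their own outputs and refine them. A single generative call can be prompted to critique text, but it cannot execute its output, observe the result, and try again; that loop needs orchestration around the model.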

You might design an agent that generates a Python script, runs it against test data, identifies failing edge cases, and re-generates a patch. The internal validation and feedback-to-generation loop requires agentic coordination.
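That generate, run, and patch loop can be sketched as follows. The `generate` callable stands in for an LLM call, and the convention that generated code defines a function named `solve` is an assumption for this example. The sketch uses `exec` for brevity; generated code should only ever be executed inside a sandbox.

```python
def failing_cases(source, cases):
    """Execute `source`, then return the argument tuples whose output is wrong."""
    namespace = {}
    exec(source, namespace)  # WARNING: sandbox this in any real system
    func = namespace["solve"]
    failures = []
    for args, expected in cases:
        try:
            passed = func(*args) == expected
        except Exception:
            passed = False  # a crash counts as a failing case
        if not passed:
            failures.append(args)
    return failures

def repair_loop(generate, cases, max_rounds=3):
    """Call generate(failures) until all cases pass or rounds run out."""
    failures = None  # None signals the first attempt
    for round_no in range(1, max_rounds + 1):
        source = generate(failures)
        failures = failing_cases(source, cases)
        if not failures:
            return source, round_no
    return None, max_rounds

# Stand-in for an LLM: a buggy first draft, then a patched version.
BUGGY = "def solve(a, b):\n    return a / b\n"
PATCHED = "def solve(a, b):\n    return a / b if b else 0.0\n"

def fake_generate(failures):
    return BUGGY if failures is None else PATCHED

CASES = [((6, 3), 2.0), ((1, 0), 0.0)]
```

In a real agent, the list of failing inputs would be formatted into the next prompt so the model can see exactly which edge cases broke.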

When tasks are short, deterministic, and self-contained, Generative AI excels due to its low latency and simplicity.

Generating boilerplate code, rewriting SQL queries, summarizing a README, or translating documentation are all use cases where the overhead of agents is unnecessary.

In production systems where speed and reliability are paramount, such as customer-facing chat or autocomplete in IDEs, the predictability of generative APIs is ideal.

If you're building a browser extension that provides code snippet suggestions inline, you want minimal inference latency. Agent orchestration in this context would be overkill and introduce lag.

If you’re building your first agentic system, consider the following elements:

LangGraph, AutoGen, CrewAI, OpenDevin, and others offer scaffolds for building agentic applications. Evaluate their support for multi-agent orchestration, tool interfaces, and error handling.

Use Redis or Postgres for structured state, and vector databases like Chroma for unstructured or semantic memory. Ensure TTLs and eviction strategies are clearly defined.
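To show what a TTL means for agent state, here is an in-memory sketch of key expiry. `TTLStore` is a hypothetical class written for this example, not a Redis client; it only mimics the idea that a key stops being readable once its lifetime has elapsed.

```python
import time

class TTLStore:
    """Hypothetical in-memory key-value store with per-key expiry."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock      # injectable so tests can control time
        self._data = {}

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key, default=None):
        item = self._data.get(key)
        if item is None:
            return default
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._data[key]  # lazy eviction on read
            return default
        return value
```

This version evicts lazily, only when an expired key is read, so a long-running agent would also need a periodic sweep or a size cap to bound memory.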

Define robust action schemas with parameter validation and safety constraints. Use OpenAPI or JSON Schema formats for tools. Establish fallback tools or manual override policies.
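The sketch below shows parameter validation against a schema written in a JSON Schema-like shape. `SCALE_TOOL` and its limits are invented for illustration, and the hand-rolled `validate` function understands only the few keywords used here (`required`, `type`, `minimum`, `maximum`); a production system would normally use a full JSON Schema validator library instead.

```python
# Hypothetical tool definition; the replica bounds act as a safety constraint.
SCALE_TOOL = {
    "name": "scale_deployment",
    "parameters": {
        "type": "object",
        "required": ["deployment", "replicas"],
        "properties": {
            "deployment": {"type": "string"},
            "replicas": {"type": "integer", "minimum": 1, "maximum": 20},
        },
    },
}

TYPES = {"string": str, "integer": int}

def validate(schema, params):
    """Return a list of error strings; an empty list means the call may proceed."""
    spec = schema["parameters"]
    errors = [f"missing: {name}" for name in spec.get("required", []) if name not in params]
    for name, value in params.items():
        prop = spec["properties"].get(name)
        if prop is None:
            errors.append(f"unexpected: {name}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(value, bool) or not isinstance(value, TYPES[prop["type"]]):
            errors.append(f"wrong type: {name}")
        elif "minimum" in prop and value < prop["minimum"]:
            errors.append(f"below minimum: {name}")
        elif "maximum" in prop and value > prop["maximum"]:
            errors.append(f"above maximum: {name}")
    return errors
```

Rejecting unexpected parameters outright is a deliberately strict choice: it stops a model from smuggling in arguments the tool author never reviewed.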

Instrument your agent with logs, metrics, and traces. Use tools like OpenTelemetry or custom dashboards. This is critical for debugging complex agent failures and retry loops.
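As a lightweight starting point before adopting a tracing framework, each agent step can be wrapped in a decorator that records its name, outcome, and duration. The `traced` decorator and the in-memory `SPANS` list are hypothetical and use only the standard library; they are a stand-in for real spans, not an OpenTelemetry integration.

```python
import functools
import logging
import time

logger = logging.getLogger("agent")
SPANS = []  # in-memory sink; a real system would export these somewhere durable

def traced(step_name):
    """Decorator that records status and duration for one agent step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise  # record the failure but do not swallow it
            finally:
                duration = time.perf_counter() - start
                SPANS.append({"step": step_name, "status": status, "duration_s": duration})
                logger.info("step=%s status=%s duration_s=%.4f", step_name, status, duration)
        return wrapper
    return decorator
```

Because every retry passes through the same wrapper, a retry loop shows up as repeated entries for the same step, which makes runaway loops easy to spot.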

Agents interacting with production systems need scoped API access, secure sandboxing, and strict role-based access controls. Use OAuth flows, audit logs, and sandboxed execution layers to mitigate risk.
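A minimal sketch of role-based scoping with an audit trail is shown below. The role names, the scope strings, and the `authorize` helper are assumptions for illustration; in practice the scopes would come from your identity provider or OAuth token rather than a hard-coded table.

```python
# Hypothetical role-to-scope mapping.
ROLE_SCOPES = {
    "reader": {"logs:read"},
    "deployer": {"logs:read", "deploy:write"},
}

def authorize(role, scope, audit_log):
    """Check whether `role` holds `scope`, recording every decision."""
    allowed = scope in ROLE_SCOPES.get(role, set())
    # Denied attempts are logged too, since they are often the interesting ones.
    audit_log.append({"role": role, "scope": scope, "allowed": allowed})
    return allowed
```

Calling `authorize` inside the tool-invocation path, before any tool runs, means the agent cannot reach a production system through a code path that skips the check.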

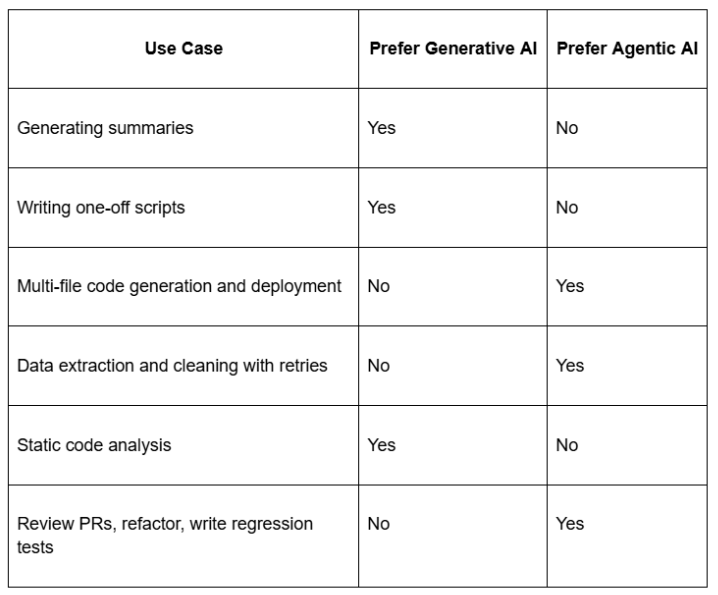

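The decision to use Agentic AI over Generative AI should be driven by the nature of the task, not the hype surrounding autonomy. Developers should assess whether the task spans multiple steps, depends on external tools, needs state that persists across runs, or must adapt to feedback, and weigh those needs against the latency and operational overhead an agent introduces.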

Agentic AI unlocks new dimensions in productivity, especially for multi-step workflows, DevOps automation, and software agents. But it requires thoughtful architecture, robust tooling, and observability.

As developers, our goal is not to use the most advanced tool, but the most appropriate one. When the task demands multi-step complexity, memory, and adaptive planning, Agentic AI is the right fit.