As autonomous application development gains traction in modern software workflows, developers are seeking AI paradigms that can support increasingly complex, adaptive, and goal-driven tasks. While generative AI continues to dominate headlines for its ability to produce code, text, and other content through powerful language models, a more structured and capable alternative is emerging: agentic AI.

This post offers a technical comparison of agentic AI and generative AI, focusing on their capabilities, limitations, and ideal use cases in the context of autonomous application development. Through architectural insights and a practical, developer-oriented evaluation, we will answer the central question: agentic or generative AI, which is better suited to autonomous application development?

Generative AI refers to machine learning systems that produce new data artifacts such as code, text, images, or synthetic data samples based on learned distributions. Most generative AI systems used in software engineering are powered by large language models (LLMs) built on transformer architectures. These models map an input prompt to an output, using patterns derived from large-scale training data.

For developers, generative AI often manifests as tools like GitHub Copilot, ChatGPT, or Replit AI, which can suggest code completions, generate documentation, or scaffold components based on human input. The execution is typically stateless and reactive. Each prompt is processed independently, meaning the model does not retain memory of prior interactions unless explicitly given context in the prompt window.

Agentic AI systems build upon generative models but extend their capabilities with a set of orchestration and planning layers that enable autonomous behavior. An agentic system is characterized by its ability to maintain state, formulate subgoals, interact with external tools and APIs, and execute sequences of actions toward a defined objective. Rather than being limited to one-shot prediction, agentic AI operates iteratively within feedback loops that resemble classical AI planning systems.

In an autonomous development workflow, an agentic AI might clone a repository, analyze the project structure, identify missing features, generate code for a new service, test it using an external test runner, debug it based on logs, and finally commit and deploy the code. These agents can dynamically decide what action to take next based on evolving context, leveraging both memory and tool integration.
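A workflow like this can be sketched as a loop in which the agent inspects its state and picks the next action. The sketch below is a toy simulation under stated assumptions: the state keys, the `next_action` policy, and the `apply` function (which fakes a failing first test run) are all hypothetical and stand in for real repository, test-runner, and deployment calls.

```python
def next_action(state):
    """Pick the next step from the current state (a hand-written toy policy)."""
    if not state["cloned"]:
        return "clone"
    if not state["analyzed"]:
        return "analyze"
    if not state["code_written"]:
        return "generate"
    if state["tests_passing"] is None:
        return "test"
    if state["tests_passing"] is False:
        return "debug"
    if not state["committed"]:
        return "commit"
    return None  # goal reached


def apply(state, action):
    """Simulated environment: the first test run fails, later runs pass."""
    if action == "clone":
        state["cloned"] = True
    elif action == "analyze":
        state["analyzed"] = True
    elif action == "generate":
        state["code_written"] = True
    elif action == "test":
        state["test_runs"] += 1
        state["tests_passing"] = state["test_runs"] >= 2
    elif action == "debug":
        state["tests_passing"] = None  # a fix was applied, so tests must rerun
    elif action == "commit":
        state["committed"] = True


def run_agent(max_steps=20):
    """Run the decide/act loop until the goal is reached or steps run out."""
    state = {"cloned": False, "analyzed": False, "code_written": False,
             "tests_passing": None, "committed": False, "test_runs": 0}
    trace = []
    for _ in range(max_steps):
        action = next_action(state)
        if action is None:
            break
        apply(state, action)
        trace.append(action)
    return trace, state


trace, final_state = run_agent()
print(trace)
```

The failed test run sends the loop through `debug` and a second `test` before `commit`, which is the kind of context-dependent branching a single prompt-to-output call does not perform on its own.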

Generative AI systems process each request in isolation. For example, asking a model to write a test suite for a class yields output based solely on the prompt context. If you follow up with a second prompt to fix an error or expand the coverage, the model will not remember the previous exchange unless you explicitly include the prior context. This stateless nature prevents the model from autonomously managing tasks that require persistence, long-term strategy, or interdependencies.
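The difference between a stateless call and one with explicitly carried context can be illustrated with a small sketch. Here `fake_model` is a hypothetical stand-in for a model call (it only reports whether the class name is visible in the text it receives), and `ask_stateless` and `ask_with_history` are illustrative helper names, not part of any real API.

```python
def fake_model(prompt: str) -> str:
    # Hypothetical model: reports whether the class name appears in its input.
    return "knows UserService" if "UserService" in prompt else "no context"


def ask_stateless(model, prompt: str) -> str:
    """Each call is independent; nothing from earlier calls is visible."""
    return model(prompt)


def ask_with_history(model, history: list, prompt: str) -> str:
    """The caller, not the model, carries context by resending prior turns."""
    full_prompt = "\n".join(history + [prompt])
    reply = model(full_prompt)
    history.extend([prompt, reply])
    return reply


# Stateless: the follow-up prompt has lost the class name.
first = ask_stateless(fake_model, "Write tests for class UserService")
followup = ask_stateless(fake_model, "Now add edge cases")

# With history: the earlier turn is resent alongside the follow-up.
history = []
ask_with_history(fake_model, history, "Write tests for class UserService")
followup_with_context = ask_with_history(fake_model, history, "Now add edge cases")

print(first, "|", followup, "|", followup_with_context)
```

Chat-style tools typically do something similar behind the scenes: the apparent memory comes from the context that is resent with each request.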

Agentic systems are designed to be stateful. They maintain memory structures across multiple steps, enabling them to track progress, recall previous decisions, and avoid redundant work. Memory in agentic systems may be short-term (local context window), long-term (external memory modules), or hybrid. This stateful execution allows for complex task flows such as building modular codebases, tracking implementation across files, or debugging interrelated modules.
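A hybrid memory of this kind might look like the following minimal sketch. The `AgentMemory` class and its method names are assumptions made for illustration: a bounded deque plays the role of the short-term context window, and a plain dictionary stands in for an external long-term store.

```python
from collections import deque


class AgentMemory:
    """Toy hybrid memory: bounded recent context plus an unbounded step log."""

    def __init__(self, short_term_size: int = 3):
        # Short-term: only the most recent steps, like a context window.
        self.short_term = deque(maxlen=short_term_size)
        # Long-term: every completed step, standing in for an external store.
        self.long_term = {}

    def record(self, step: str, result: str) -> None:
        self.short_term.append((step, result))
        self.long_term[step] = result

    def already_done(self, step: str) -> bool:
        """Lets the agent skip redundant work."""
        return step in self.long_term

    def recent_context(self) -> list:
        return list(self.short_term)


memory = AgentMemory(short_term_size=3)
for step in ["scaffold", "models", "routes", "tests"]:
    if not memory.already_done(step):
        memory.record(step, "ok")

print(memory.recent_context())
print(memory.already_done("scaffold"))
```

The oldest step falls out of the short-term window but stays retrievable from the long-term store, which is what lets an agent recall earlier decisions without holding everything in its prompt.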

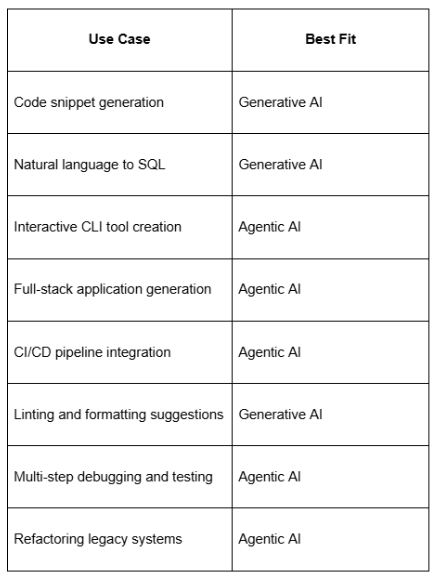

Generative AI systems excel at executing isolated tasks defined explicitly through prompts. They do not plan, iterate, or correct themselves unless prompted to do so. This makes them effective for well-scoped development tasks, such as generating a React component, translating code, or producing documentation. However, they struggle with open-ended tasks that involve uncertainty, incomplete specifications, or conditional branching.

Agentic systems incorporate planning mechanisms that allow them to break down abstract goals into actionable subtasks. For example, if given a prompt to "add a user authentication system," an agentic AI can determine the need for a login route, user database schema, JWT-based auth middleware, error handling, and test coverage. Agents can dynamically replan when encountering roadblocks, which mirrors how a human developer would navigate ambiguous or iterative requirements.

Planning capabilities are typically implemented using behavior trees, task graphs, or hierarchical goal structures. The agent’s execution loop includes decision points that evaluate current progress and adjust strategies accordingly. This enables autonomous operation across multiple levels of abstraction.
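As one illustration of a task graph, the authentication example can be written as a dependency map and ordered with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The subtask names, the dependencies between them, and the `replan` helper are assumptions chosen for this sketch; a real planner would derive them from the goal and the codebase.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition of "add a user authentication system".
# Each key depends on the subtasks listed in its value set.
AUTH_PLAN = {
    "user_schema": set(),
    "login_route": {"user_schema"},
    "jwt_middleware": {"login_route"},
    "error_handling": {"login_route", "jwt_middleware"},
    "tests": {"error_handling"},
}


def execution_order(plan):
    """Return the subtasks in an order that respects every dependency."""
    return list(TopologicalSorter(plan).static_order())


def replan(plan, blocked, fix):
    """Return a new plan in which `blocked` additionally depends on `fix`."""
    new_plan = {task: set(deps) for task, deps in plan.items()}
    new_plan[fix] = set()
    new_plan[blocked].add(fix)
    return new_plan


print(execution_order(AUTH_PLAN))

# Roadblock: the middleware needs a library that is not installed yet.
revised = replan(AUTH_PLAN, "jwt_middleware", "install_jwt_library")
print(execution_order(revised))
```

Replanning here only adds a prerequisite and recomputes the order; the original plan is left untouched so the agent can compare or roll back.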

Generative AI can suggest code that interacts with tools or APIs, but it does not execute these interactions natively. For instance, it can generate a curl command or Kubernetes deployment script, but it cannot validate, run, or debug them unless a human developer does so manually.

Agentic AI is inherently tool-aware. These systems are integrated with toolchains such as file systems, shells, version control systems, linters, and CI/CD platforms. They can invoke tools, handle outputs, perform retries, and interpret logs. In more advanced systems, tools are registered in a dynamic action space and are selected based on the agent’s plan and environment state.
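A registered action space with retries can be sketched in a few lines. Everything here is hypothetical: the `TOOLS` registry, the `tool` decorator, the `invoke` helper, and the two toy tools stand in for real integrations with shells, linters, or CI systems.

```python
TOOLS = {}


def tool(name):
    """Decorator that registers a function in the agent's action space."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("count_lines")
def count_lines(text: str) -> int:
    return len(text.splitlines())


@tool("find_todos")
def find_todos(text: str) -> list:
    return [line.strip() for line in text.splitlines() if "TODO" in line]


def invoke(name, retries=2, **kwargs):
    """Call a registered tool, retrying on failure and reporting the outcome."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    last_error = None
    for attempt in range(1, retries + 2):
        try:
            return {"ok": True, "output": TOOLS[name](**kwargs), "attempts": attempt}
        except Exception as exc:  # a real agent would filter retryable errors
            last_error = exc
    return {"ok": False, "output": repr(last_error), "attempts": retries + 1}


source = "def f():\n    pass  # TODO: implement\n"
print(invoke("count_lines", text=source))
print(invoke("find_todos", text=source))
```

Returning a structured result instead of raising lets the planner treat a tool failure as information to act on, not as a crash.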

This active tool usage makes agentic AI uniquely capable of real-world execution. For example, an agent could detect a failing test, trace the error to a faulty function, regenerate the function, rerun the test suite, and only commit changes upon success.
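The detect, regenerate, rerun, and commit cycle can be sketched as follows. This is a simulation under stated assumptions: `CANDIDATES` stands in for successive outputs of a code generator, `run_tests` is a tiny hand-written suite, and "commit" is represented by returning the accepted source, with no call to a version control system.

```python
# Hypothetical generator outputs: a faulty first attempt, then a regenerated fix.
CANDIDATES = [
    "def add(a, b):\n    return a - b\n",
    "def add(a, b):\n    return a + b\n",
]


def load(source: str):
    """Execute generated source in an isolated namespace and return `add`."""
    namespace = {}
    exec(source, namespace)
    return namespace["add"]


def run_tests(func) -> list:
    """Return failure messages; an empty list means the suite passed."""
    failures = []
    for args, expected in [((2, 3), 5), ((-1, 1), 0)]:
        got = func(*args)
        if got != expected:
            failures.append(f"add{args} returned {got}, expected {expected}")
    return failures


def fix_until_green(candidates, max_attempts=3):
    """Try candidates in order; 'commit' only the first one that passes."""
    committed = None
    log = []
    for attempt, source in enumerate(candidates[:max_attempts], start=1):
        failures = run_tests(load(source))
        log.append((attempt, failures))
        if not failures:
            committed = source  # stand-in for an actual commit
            break
    return committed, log


committed, log = fix_until_green(CANDIDATES)
print(log)
```

The failure messages collected in `log` are what a real agent would feed back to the generator when asking for the next candidate.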

A generative model's output is determined by the prompt and the sampling settings; the model does not verify what it produces. If the output contains an error, the error remains until someone refines the prompt or feeds the failure back in. Prompt chaining or chain-of-thought (CoT) prompting can mitigate this to some degree, but the model does not inherently recognize or fix errors across executions.

Agentic AI is explicitly designed to be adaptive. Through the use of control loops, agents monitor the success or failure of their actions and adjust accordingly. This feedback-awareness allows agents to self-correct, refactor failing logic, and optimize their workflows over time. Mechanisms like execution scoring, success thresholds, and reward models can be integrated to evaluate progress and trigger replanning.
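A success threshold that triggers replanning can be illustrated with a short control loop. The strategy names, the simulated outcomes, and the pass-rate scoring function are assumptions made for this sketch; real systems may use richer signals such as reward models.

```python
def control_loop(strategies, evaluate, threshold=0.8):
    """Try strategies in order; accept the first whose score meets the threshold."""
    history = []
    for name, act in strategies:
        score = evaluate(act())
        history.append((name, score))
        if score >= threshold:
            return name, history
        # Score below threshold: fall through to the next strategy (replan).
    return None, history


def pass_rate(outcome):
    """Execution score: fraction of tests that passed."""
    passed, total = outcome
    return passed / total


# Hypothetical outcomes: (tests passed, tests total) for each strategy.
strategies = [
    ("patch_in_place", lambda: (6, 10)),
    ("rewrite_module", lambda: (9, 10)),
    ("rollback", lambda: (10, 10)),
]

chosen, history = control_loop(strategies, pass_rate)
print(chosen, history)
```

The loop stops at the first strategy that clears the threshold, so the more drastic `rollback` option is never executed in this run.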

From a development perspective, this resilience is vital. When integrating with legacy codebases, managing multi-service architectures, or deploying to dynamic environments, the ability to learn from failure and adapt autonomously is a defining requirement.

Generative AI systems are well-suited for fine-grained generation tasks such as writing individual functions, generating SQL queries, or scaffolding single-page components. However, they falter when tasked with producing or managing coherent, interdependent systems.

Agentic AI scales across levels of abstraction. An agent might generate project scaffolds, integrate authentication flows, connect frontend and backend modules, seed databases, and deploy applications, all within a consistent architectural vision. This system-level synthesis requires the agent to understand project structure, coding conventions, runtime requirements, and testing practices in aggregate.

For developers building multi-module applications, monorepos, or microservices, agentic AI offers a more scalable and integrated approach.

Hybrid systems are also viable. A generative model can be embedded as a sub-component within an agentic system, where it serves as the code generation engine for a planning agent that handles orchestration and verification.
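One way to picture this hybrid arrangement is a planning agent that takes the generator and the verifier as pluggable functions. In the sketch below, `template_generator` is a hypothetical stand-in for a model call, `syntax_verifier` uses the standard library's `ast.parse` as a deliberately minimal verification step, and `PlanningAgent` is an illustrative name only.

```python
import ast


def template_generator(task: str) -> str:
    """Stand-in for an LLM call: returns a function stub named after the task."""
    return f"def {task}():\n    raise NotImplementedError\n"


def syntax_verifier(code: str) -> bool:
    """Verification step: accept only code that parses as valid Python."""
    try:
        ast.parse(code)
        return True
    except SyntaxError:
        return False


class PlanningAgent:
    """Orchestrates subtasks; delegates generation, then verifies each result."""

    def __init__(self, generate, verify):
        self.generate = generate
        self.verify = verify

    def run(self, subtasks):
        accepted, rejected = {}, []
        for task in subtasks:
            artifact = self.generate(task)
            if self.verify(artifact):
                accepted[task] = artifact
            else:
                rejected.append(task)
        return accepted, rejected


agent = PlanningAgent(template_generator, syntax_verifier)
accepted, rejected = agent.run(["login_route", "jwt middleware"])
print(sorted(accepted), rejected)
```

Because the generator is injected, the same orchestration and verification code would work unchanged if the stub were swapped for a real model client.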

Current frameworks reflect the maturity gap between generative and agentic AI in terms of tooling, observability, and reliability. Agentic systems have less mature ecosystem support but offer significantly broader functional scope.

Building agentic AI systems introduces challenges around task decomposition, memory management, error recovery, and observability. Agents require robust action schemas, retry policies, and context isolation mechanisms. Testing becomes more intricate, since outcomes depend on dynamic tool outputs, state transitions, and environmental conditions.

Moreover, debugging agentic workflows requires tracing execution paths across multiple layers, including planner decisions, tool invocations, and memory states. These systems demand rigorous logging, checkpointing, and simulation environments to ensure predictable behavior.
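A minimal sketch of such tracing and checkpointing is shown below. The `Trace` class, its event fields, and the layer names are assumptions for illustration; a production system would more likely build on a structured logging or tracing library.

```python
import json


class Trace:
    """Append-only execution trace that can be checkpointed and restored."""

    def __init__(self, events=None):
        self.events = list(events or [])

    def log(self, layer, detail, memory):
        # Snapshot the memory state so later mutations do not alter the record.
        self.events.append({
            "step": len(self.events),
            "layer": layer,
            "detail": detail,
            "memory": dict(memory),
        })

    def by_layer(self, layer):
        """Filter events by layer, e.g. planner decisions or tool invocations."""
        return [event for event in self.events if event["layer"] == layer]

    def checkpoint(self) -> str:
        return json.dumps(self.events)

    @classmethod
    def restore(cls, blob: str):
        return cls(json.loads(blob))


trace = Trace()
memory = {}
trace.log("planner", "chose subtask: login_route", memory)
memory["login_route"] = "in_progress"
trace.log("tool", "ran linter", memory)
memory["login_route"] = "done"
trace.log("planner", "chose subtask: tests", memory)

restored = Trace.restore(trace.checkpoint())
print(len(restored.by_layer("planner")))
```

Recording the memory state alongside each planner decision and tool invocation makes it possible to replay a run step by step when a workflow misbehaves.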

From an engineering standpoint, investing in agentic architecture means embracing complexity for the sake of capability. It requires principles from software architecture, reinforcement learning, symbolic planning, and systems engineering.

While generative AI is a powerful tool for augmenting individual development tasks, it falls short when developers demand autonomy, feedback integration, and full-system orchestration. Agentic AI, with its emphasis on memory, planning, tool interaction, and adaptive execution, provides a more complete foundation for autonomous application development.

For developer teams working on IDE extensions, autonomous devtools, or continuous deployment pipelines, agentic systems are not just more powerful; they are structurally aligned with the requirements of real-world software engineering.

In the agentic versus generative AI debate, the agentic paradigm stands out as the more capable and sustainable choice for any task beyond isolated code generation.