As the AI ecosystem rapidly advances, developers find themselves navigating a shifting landscape of capabilities, tools, and architectural patterns. Generative AI has already become deeply embedded in the software development process, from assisting with autocomplete and documentation to writing code and test cases. However, a new class of AI systems has started to gain momentum in 2024 and 2025, one that extends beyond generation and into execution, autonomy, and iterative reasoning. These systems are often referred to as Agentic AI, and they represent a major evolution in the design and deployment of intelligent automation.

This post unpacks the technical distinctions between Agentic AI and Generative AI, analyzes their architectural underpinnings, and gives developers a practical understanding of how to use these systems to automate complex workflows. If you are building AI-native products, integrating AI into your backend, or simply optimizing your development workflow, understanding this shift is critical for future-proofing your approach.

Generative AI refers to a class of artificial intelligence systems that are designed to produce original outputs by learning from patterns within large-scale training datasets. These models are primarily trained using unsupervised or self-supervised learning techniques on enormous corpora of text, code, images, or audio. The most common architectures used in generative AI today are transformer-based large language models, including GPT, Claude, LLaMA, and Mistral.

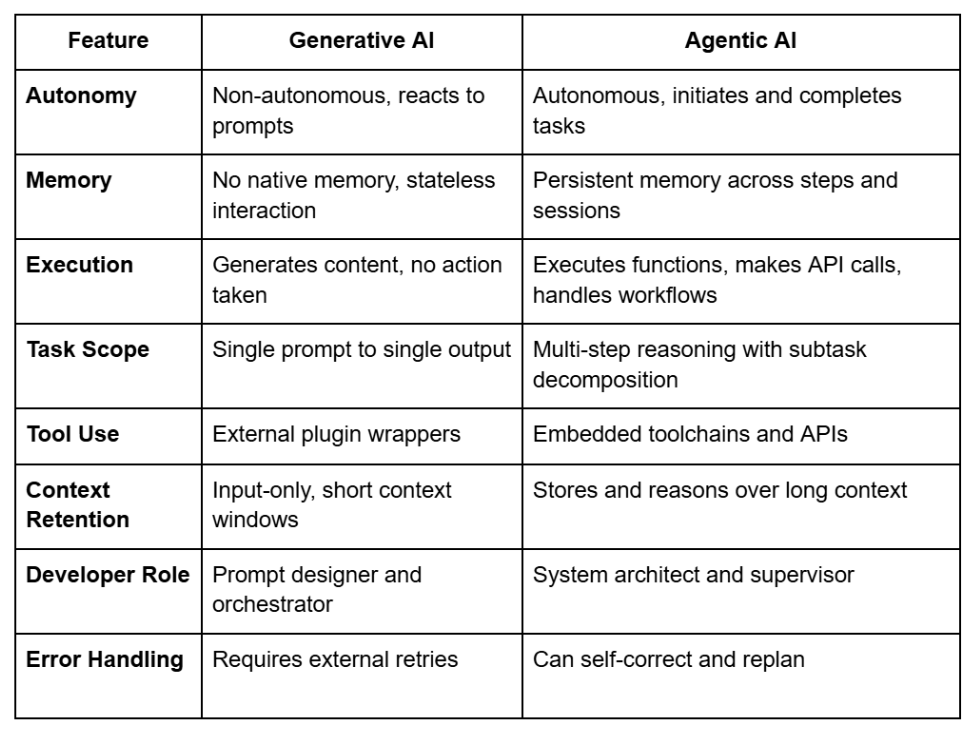

Generative AI models are often described as stateless, prompt-based, and reactive, meaning they operate on the basis of an input prompt and do not maintain an internal representation of long-term goals, memory, or task state across multiple invocations. They are optimized for single-shot or few-shot inference and typically operate in isolated execution loops.

Generative AI models are designed to function as language processors that generate output based solely on the input prompt they receive. There is no inherent memory or context persistence between one interaction and the next unless such state is explicitly managed through session variables or external memory components provided by developers. This makes these models reactive in nature, lacking the ability to proactively pursue a goal unless repeatedly instructed by a user or an external orchestrator.

These systems function in single-turn or few-turn modes, meaning they take a prompt, generate an output, and terminate. While you can simulate multi-turn behavior using conversational memory mechanisms, the model itself does not natively maintain state. For example, a Generative AI model can generate a function to sort an array when asked, but it will not recall that the same array was part of a broader data transformation pipeline unless context is supplied again.
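To make the statelessness concrete, here is a minimal sketch in Python. `fake_model` is a hypothetical stand-in for any LLM call, not a real library function, and its canned answers are invented for illustration. The point it tries to show is that the conversation history lives in the caller's code and has to be resent on every invocation.

```python
from typing import List


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: it sees only `prompt`."""
    if "pipeline" in prompt:
        return "sort_step(pipeline_data)"
    return "sorted(array)"


def ask_stateless(question: str) -> str:
    # Each call is independent; nothing from earlier calls is available.
    return fake_model(question)


def ask_with_history(history: List[str], question: str) -> str:
    # The caller, not the model, carries state by resending prior turns.
    prompt = "\n".join(history + [question])
    answer = fake_model(prompt)
    history.extend([question, answer])
    return answer


history: List[str] = ["The array is one stage of a data pipeline."]
without_context = ask_stateless("Write a function to sort the array.")
with_context = ask_with_history(history, "Write a function to sort the array.")
```

The same question produces a pipeline-aware answer only when the earlier turn is supplied again, which is what conversational memory wrappers do behind the scenes.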

Generative AI models do not possess the capacity to act autonomously. They generate content in response to explicit instructions and lack any intrinsic mechanisms for goal formulation, execution control, or self-correction. This means that while they are highly capable within bounded contexts, they require extensive orchestration to function as part of a larger system or workflow.

Generative AI has found widespread adoption across the software engineering domain, including:

From a developer's perspective, Generative AI is best thought of as an enhancement layer. It augments the developer experience but does not fundamentally alter the architecture or process of building software. The human developer remains at the center of control, with the model acting as a powerful assistant, not an autonomous executor.

Agentic AI represents a paradigm shift in artificial intelligence where systems are no longer confined to generating outputs in isolation. Instead, Agentic AI models are embedded within a broader autonomous framework that allows them to take actions, reason through tasks, and execute sequences based on predefined or emergent goals.

The term "agentic" in this context refers to the capacity of an AI system to act as an agent, capable of initiating, monitoring, and completing tasks without constant human intervention. These systems are built using combinations of foundation models, planning algorithms, long-term memory systems, tool use interfaces, and control loops. The result is an AI that can pursue goals through multiple iterations, adapt based on feedback, and interact with external systems to perform real work.

Unlike Generative AI, which responds to short prompts, Agentic AI operates around the concept of objectives or tasks. Given a high-level goal, the system decomposes it into actionable steps, prioritizes them, and executes them sequentially or concurrently. This introduces notions of strategic reasoning, temporal planning, and long-term memory, which are absent in conventional LLM setups.
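As a rough illustration of goal decomposition and prioritization, the sketch below turns a goal into prioritized steps and runs them in order. The `decompose` function is a hypothetical planner with hard-coded steps; in a real agent a model would produce the plan, and execution would do actual work rather than record step names.

```python
from dataclasses import dataclass, field
from typing import List
import heapq


@dataclass(order=True)
class Step:
    priority: int                       # lower number runs first
    name: str = field(compare=False)    # excluded from ordering


def decompose(goal: str) -> List[Step]:
    """Hypothetical planner: a real agent would ask a model for this plan."""
    return [
        Step(2, f"implement: {goal}"),
        Step(1, f"clarify requirements: {goal}"),
        Step(3, f"verify: {goal}"),
    ]


def run_plan(goal: str) -> List[str]:
    queue = decompose(goal)
    heapq.heapify(queue)                # order steps by priority
    executed = []
    while queue:
        step = heapq.heappop(queue)
        executed.append(step.name)      # stand-in for real execution
    return executed


plan_order = run_plan("CSV export")
```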

Agentic AI frameworks enable an iterative loop of reasoning, execution, and evaluation. An agent can attempt a solution, assess whether it meets its goal, and modify its approach accordingly. This is a critical feature for handling real-world developer workflows, which often involve error handling, retries, and adjustments based on runtime feedback.
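The reason, execute, evaluate loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any framework's implementation: `agent_loop`, `toy_attempt`, and `toy_evaluate` are hypothetical names, and the toy task simply stands in for a model-generated solution plus a real check such as a test run.

```python
from typing import Callable, List, Tuple


def agent_loop(
    attempt: Callable[[int, List[str]], str],
    evaluate: Callable[[str], Tuple[bool, str]],
    max_iterations: int = 5,
) -> Tuple[str, int]:
    """Try, evaluate, and revise until the goal check passes or the budget runs out."""
    feedback: List[str] = []
    for iteration in range(1, max_iterations + 1):
        candidate = attempt(iteration, feedback)   # reasoning + execution
        ok, message = evaluate(candidate)          # evaluation
        if ok:
            return candidate, iteration
        feedback.append(message)                   # feeds the next attempt
    raise RuntimeError(f"goal not met after {max_iterations} iterations")


# Toy task: produce a string that parses as a positive integer.
def toy_attempt(iteration: int, feedback: List[str]) -> str:
    return "-1" if not feedback else "42"


def toy_evaluate(candidate: str) -> Tuple[bool, str]:
    value = int(candidate)
    return (value > 0, "" if value > 0 else "value must be positive")


result, iterations_used = agent_loop(toy_attempt, toy_evaluate)
```

The iteration cap matters in practice: without it, an agent that never satisfies its own check would loop indefinitely.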

Agentic systems are typically integrated with APIs, system calls, shell environments, databases, and cloud providers. These integrations are made possible through function-calling capabilities, tool routers, or structured action schemas. For example, an agent can decide to call a GitHub API to fetch a pull request, analyze its diff, comment with a review, and merge it, all without human intervention.
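One common way to wire up tool use is a registry that maps tool names to functions and a dispatcher that routes structured actions to them. The sketch below assumes the model emits actions as JSON with `tool` and `args` keys; that schema, the registry, and both tools are hypothetical, and `fetch_pull_request` is a stub rather than a real GitHub API call.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the agent can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def fetch_pull_request(number: int) -> Dict[str, Any]:
    # Hypothetical stub; a real tool would call the hosting provider's API.
    return {"number": number, "diff": "+ print('hello')"}


@tool
def count_added_lines(diff: str) -> int:
    return sum(1 for line in diff.splitlines() if line.startswith("+"))


def dispatch(action_json: str) -> Any:
    """Parse a model-emitted action and route it to the matching tool."""
    action = json.loads(action_json)
    name, args = action["tool"], action.get("args", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)


pr = dispatch('{"tool": "fetch_pull_request", "args": {"number": 7}}')
added = dispatch(json.dumps({"tool": "count_added_lines", "args": {"diff": pr["diff"]}}))
```

Rejecting unknown tool names at the dispatcher is a simple guardrail: the agent can only take actions that were explicitly registered.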

Agentic AI architectures use memory modules to store intermediate results, environment variables, previous execution states, and long-term preferences. This enables them to make context-aware decisions over the course of long-running sessions. Memory can be handled via vector stores, key-value stores, or custom JSON-based memory graphs.
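A minimal sketch of the two most common memory styles, a key-value store for exact facts and a vector store for similarity-based recall, might look like the following. `AgentMemory` is a hypothetical class, and the hand-written two-dimensional vectors stand in for embeddings that a real system would get from an embedding model.

```python
import math
from typing import Dict, List, Tuple


def _cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class AgentMemory:
    """Key-value store for exact facts plus a tiny vector store for recall."""

    def __init__(self) -> None:
        self.facts: Dict[str, str] = {}
        self.vectors: List[Tuple[List[float], str]] = []

    def remember_fact(self, key: str, value: str) -> None:
        self.facts[key] = value

    def remember_text(self, embedding: List[float], text: str) -> None:
        self.vectors.append((embedding, text))

    def recall(self, query: List[float]) -> str:
        # Return the stored text whose embedding is most similar to the query.
        return max(self.vectors, key=lambda item: _cosine(item[0], query))[1]


memory = AgentMemory()
memory.remember_fact("preferred_test_runner", "pytest")
# Hand-written 2-D vectors stand in for real embeddings.
memory.remember_text([1.0, 0.0], "build failed: missing dependency")
memory.remember_text([0.0, 1.0], "deploy succeeded on staging")
closest = memory.recall([0.9, 0.1])
```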

Agentic AI redefines what it means to build software by enabling developers to move from writing code to orchestrating intelligence. Developers can now build systems where agents generate, validate, test, and deploy code autonomously. This significantly reduces time-to-production for certain classes of applications and increases the level of abstraction at which developers can operate.

Instead of being restricted to one-shot prompts, Agentic AI frameworks allow you to define multi-step pipelines where agents take high-level directives and handle the entire execution. For example, given the instruction to "build an Express.js server with CRUD APIs for a product catalog", the agent can:

AI agents can be integrated into developer tools such as Visual Studio Code, JetBrains IDEs, or cloud-native platforms. With access to GitHub repositories, CI/CD configurations, and test frameworks, these agents become co-developers capable of modifying files, triggering pipelines, and analyzing results in real time.

Agentic frameworks offer developers the ability to define logic around how agents should think, retry, or recover from errors. You can define control flows, conditional branches, and recovery strategies using graph structures or YAML configuration:

```yaml
- if: test_results.failures > 0
  do: retry_test_generation
- else:
  do: proceed_to_deployment
```
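For comparison, the same branching rule could be expressed directly in Python. This is a sketch under assumptions: `SuiteResults`, `next_action`, and `run_until_green` are hypothetical names, and the `max_runs` cap is an addition that the configuration above does not specify.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SuiteResults:
    failures: int


def next_action(test_results: SuiteResults) -> str:
    """Mirror the rule: retry on failures, otherwise deploy."""
    if test_results.failures > 0:
        return "retry_test_generation"
    return "proceed_to_deployment"


def run_until_green(failure_counts: List[int], max_runs: int = 3) -> List[str]:
    """Apply the rule to successive test runs, stopping at `max_runs` (assumed cap)."""
    trace = []
    for failures in failure_counts[:max_runs]:
        action = next_action(SuiteResults(failures))
        trace.append(action)
        if action == "proceed_to_deployment":
            break
    return trace


trace = run_until_green([2, 1, 0])
```

Whether the rule lives in configuration or code, a retry branch usually needs an upper bound so a persistently failing suite does not retry forever.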

In more advanced setups, developers can define teams of agents with distinct roles. For instance, one agent can be responsible for backend architecture, another for writing frontend components, and a third for testing and verification. These agents can share context, assign subtasks, and work concurrently to complete complex projects.
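A role-based team can be approximated with plain functions and a thread pool. In the sketch below, the three agents are hypothetical stubs that return strings; real agents would call models and tools. The backend and frontend agents run concurrently against a shared context, and the verification agent runs afterwards because it depends on their output.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Shared context that every agent can read; written once up front.
SharedContext = Dict[str, str]


def backend_agent(ctx: SharedContext) -> str:
    return f"API schema for {ctx['project']}"


def frontend_agent(ctx: SharedContext) -> str:
    return f"UI components for {ctx['project']}"


def qa_agent(ctx: SharedContext, artifacts: Dict[str, str]) -> str:
    # Runs after the builders, since verification depends on their output.
    return f"verified {len(artifacts)} artifacts for {ctx['project']}"


def run_team(ctx: SharedContext) -> Dict[str, str]:
    builders: Dict[str, Callable[[SharedContext], str]] = {
        "backend": backend_agent,
        "frontend": frontend_agent,
    }
    with ThreadPoolExecutor() as pool:
        futures = {role: pool.submit(fn, ctx) for role, fn in builders.items()}
        artifacts = {role: future.result() for role, future in futures.items()}
    artifacts["qa"] = qa_agent(ctx, dict(artifacts))
    return artifacts


team_output = run_team({"project": "product catalog"})
```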

LangGraph is a Python-based framework that allows you to build agent systems using directed graphs. Each node represents a function, an LLM call, or a control operation. This is particularly useful for building deterministic agent pipelines with branching logic and rollback states.
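To show the underlying idea without depending on any library, here is a plain-Python sketch of a graph-driven agent. It deliberately does not use LangGraph's API; the `Graph` class, its methods, and the node names are hypothetical. Each node transforms a state dictionary, and a router function picks the next node, which is how branching and loops are expressed.

```python
from typing import Callable, Dict, Optional

State = Dict[str, int]
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]   # next node name, or None to stop


class Graph:
    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node, router: Router) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current: Optional[str] = start
        steps = 0
        while current is not None:
            if steps >= max_steps:           # guard against infinite cycles
                raise RuntimeError("step budget exceeded")
            state = self.nodes[current](state)
            current = self.routers[current](state)
            steps += 1
        return state


graph = Graph()
graph.add_node("generate", lambda s: {**s, "attempts": s["attempts"] + 1},
               lambda s: "check")
graph.add_node("check", lambda s: {**s, "passed": int(s["attempts"] >= 2)},
               lambda s: None if s["passed"] else "generate")
final_state = graph.run("generate", {"attempts": 0, "passed": 0})
```

Here the `check` node routes back to `generate` until its condition holds, giving a loop with an explicit exit and a step budget as a safety net.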

AutoGen enables multi-agent systems where agents communicate via structured messages. It provides abstractions for role-based collaboration and supports memory injection, tool usage, and conversation control.

CrewAI provides a clean abstraction for defining roles, objectives, and communication patterns between multiple agents. It is designed to be readable, extendable, and flexible, making it ideal for fast prototyping of agent teams.

GoCodeo is a full-stack AI coding agent built specifically for developers. It integrates ASK, BUILD, MCP (Model Context Protocol), and TEST stages into a unified flow. It works directly inside Visual Studio Code and supports tools like Supabase and Vercel, allowing developers to build, test, and deploy applications using agentic automation without leaving their IDE.

As Agentic AI matures, the role of developers will shift from code authoring to workflow design, agent supervision, and infrastructure orchestration. The ability to define goals and delegate execution to intelligent agents will make software development significantly faster and more modular.

Expectations for the next wave include:

The evolution from Generative AI to Agentic AI marks a fundamental transformation in how developers interact with software systems. While Generative AI unlocked creativity and efficiency, Agentic AI introduces decision-making, planning, and action, offering developers a new layer of abstraction to design intelligent automation. Understanding the difference between these paradigms is essential for developers who want to stay at the forefront of AI-driven engineering in 2025 and beyond.

Whether you're building internal developer tools, integrating AI agents into production systems, or experimenting with autonomous pipelines, embracing agentic design patterns is no longer optional; it is the logical next step in the AI software development lifecycle.