Software engineering is rapidly transitioning from static automation scripts to intelligent, context-aware systems. Traditional CI/CD pipelines, while foundational, are increasingly unable to cope with the complexity and velocity of modern product development cycles. In this context, agent-driven pipelines offer a new paradigm. These pipelines are driven by a coalition of autonomous, goal-oriented AI agents that interpret high-level intents and convert them into executable development workflows. Unlike their traditional counterparts, these pipelines are dynamic, feedback-driven and adaptive to both application and infrastructure changes.

This post takes a developer-centric look at agent-driven pipelines. We explore their internal architecture, component breakdown, operational flow and real-world integration scenarios, with a focus on how AI agents are coordinated for full-stack app development, deployment and maintenance.

Agent-driven pipelines are intelligent development pipelines orchestrated through autonomous AI agents, each with a specialized function. These agents operate collaboratively, forming a distributed multi-agent system that manages tasks across the entire software development lifecycle, including requirement parsing, code generation, validation, testing, version control, infrastructure provisioning and deployment.

Each agent in the pipeline is fine-tuned for a particular role, such as planning, coding, testing or deploying. These agents operate independently but maintain shared context through centralized memory, vector stores or external APIs. This modularity allows for more fault-tolerant and scalable systems.
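To make the idea of independent agents sharing context concrete, here is a minimal sketch of a centralized memory that two agents read from and write to. The names `SharedContext`, `planner` and `coder` are hypothetical and exist only for illustration; a real system would likely back this with a database or vector store rather than an in-process dictionary.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SharedContext:
    """Central memory that every agent reads from and writes to."""
    facts: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def write(self, agent: str, key: str, value: str) -> None:
        # Store the fact and remember which agent produced it.
        self.facts[key] = value
        self.history.append(f"{agent} set {key}")


def planner(ctx: SharedContext) -> None:
    # Hypothetical planning agent: records an architectural decision.
    ctx.write("planner", "database", "postgresql")


def coder(ctx: SharedContext) -> None:
    # Hypothetical coding agent: reads the planner's decision before acting.
    db = ctx.facts.get("database", "unknown")
    ctx.write("coder", "orm_dialect", f"{db}-dialect")


def run_agents(agents: List[Callable[[SharedContext], None]],
               ctx: SharedContext) -> SharedContext:
    # Agents run one after another but only communicate through ctx.
    for agent in agents:
        agent(ctx)
    return ctx


if __name__ == "__main__":
    final = run_agents([planner, coder], SharedContext())
    print(final.facts)
    print(final.history)
```

Because the agents only touch the shared context, either one could be replaced or retried without changing the other.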

Agents are not stateless function executors. They keep track of project structure, system dependencies and previous conversations. This context persistence lets them modify complex codebases with a lower risk of introducing regressions.

Each pipeline stage incorporates evaluation and feedback loops. For example, after code is generated, a test agent may evaluate it against a suite of unit and integration tests. Based on the results, the Builder Agent may retry code generation with refined constraints.
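The generate, evaluate and retry cycle described above can be sketched as a small loop. This is a simplified illustration, not how any particular product implements it: `toy_generate` and `toy_evaluate` are hypothetical stand-ins for a code-generating model and a test runner.

```python
from typing import Callable, List, Tuple


def feedback_loop(generate: Callable[[List[str]], str],
                  evaluate: Callable[[str], List[str]],
                  max_attempts: int = 3) -> Tuple[str, int]:
    """Regenerate code until the evaluator reports no failures."""
    constraints: List[str] = []
    for attempt in range(1, max_attempts + 1):
        code = generate(constraints)
        failures = evaluate(code)
        if not failures:
            return code, attempt
        # Feed each failure back as an extra constraint for the next attempt.
        constraints.extend(failures)
    raise RuntimeError(f"no passing candidate after {max_attempts} attempts")


def toy_generate(constraints: List[str]) -> str:
    # Stand-in for a model call: honors the constraint once it has seen it.
    status = 201 if "must return 201" in constraints else 200
    return f"return {status}"


def toy_evaluate(code: str) -> List[str]:
    # Stand-in for a test suite: an empty list means all tests passed.
    return [] if "201" in code else ["must return 201"]


if __name__ == "__main__":
    print(feedback_loop(toy_generate, toy_evaluate))
```

The `max_attempts` cap matters in practice: without it, a generator that never satisfies the evaluator would loop forever.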

Agent pipelines typically rely on Abstract Syntax Tree (AST) manipulation or the Language Server Protocol (LSP) to generate and refactor code. This allows them to work across multiple programming languages and frameworks, enabling true cross-stack automation.
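As a small illustration of AST-level refactoring, the sketch below renames a function and its call sites using Python's standard `ast` module (`ast.unparse` needs Python 3.9 or later). It is deliberately naive: it renames every identifier with the matching name, without scope analysis, so treat it as a demonstration of the technique rather than a safe refactoring tool. `RenameFunction` and `rename_function` are names chosen for this example.

```python
import ast


class RenameFunction(ast.NodeTransformer):
    """Rename a function definition and every bare reference to it."""

    def __init__(self, old: str, new: str) -> None:
        self.old = old
        self.new = new

    def visit_FunctionDef(self, node: ast.FunctionDef) -> ast.FunctionDef:
        self.generic_visit(node)  # also rewrite names inside the body
        if node.name == self.old:
            node.name = self.new
        return node

    def visit_Name(self, node: ast.Name) -> ast.Name:
        # Naive: matches any identifier with this name, regardless of scope.
        if node.id == self.old:
            node.id = self.new
        return node


def rename_function(source: str, old: str, new: str) -> str:
    tree = RenameFunction(old, new).visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)


if __name__ == "__main__":
    SOURCE = "def get_user(uid):\n    return uid\n\nprint(get_user(1))\n"
    print(rename_function(SOURCE, "get_user", "fetch_user"))
```

Working on the tree instead of raw text means the edit cannot accidentally touch strings or comments that happen to contain the old name.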

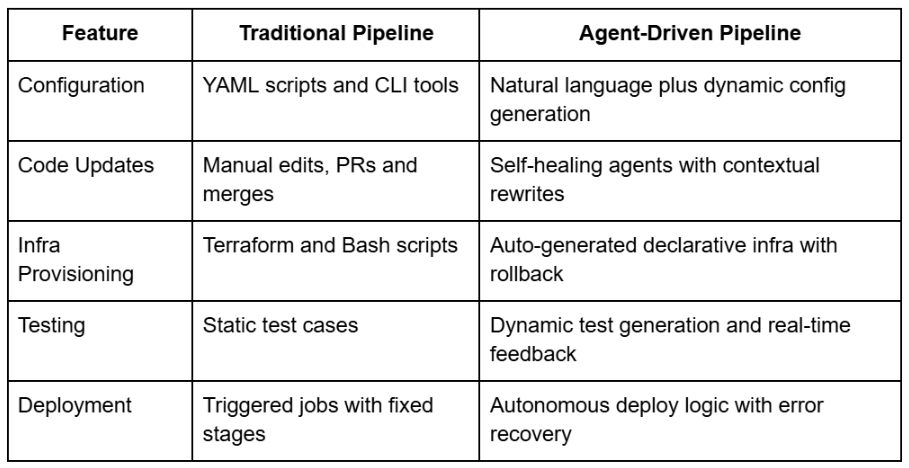

In a traditional DevOps pipeline, development cycles are gated by the time taken for code review, testing, staging and deployment. Manual interventions such as setting up CI jobs, managing environment variables and provisioning infrastructure introduce significant delays.

Agent-driven pipelines bypass these limitations through real-time feedback and parallel execution. For instance, while one agent constructs backend routes, another agent might concurrently generate database schema definitions. Combined with automated testing and immediate deployment readiness, this model can shorten development cycles substantially, in favorable cases from days to minutes.
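The parallel execution described here can be sketched with `asyncio.gather`. The two coroutines are hypothetical placeholders: in a real pipeline each would wrap a model call or tool invocation, and the short sleeps merely simulate that latency.

```python
import asyncio
from typing import Dict


async def build_backend_routes() -> str:
    # Placeholder for an agent that generates API routes.
    await asyncio.sleep(0.01)
    return "routes: GET /projects, POST /projects"


async def build_db_schema() -> str:
    # Placeholder for an agent that generates schema definitions.
    await asyncio.sleep(0.01)
    return "table: projects(id, name, status)"


async def run_parallel() -> Dict[str, str]:
    # Both agents run concurrently; gather returns results in call order.
    routes, schema = await asyncio.gather(
        build_backend_routes(),
        build_db_schema(),
    )
    return {"routes": routes, "schema": schema}


if __name__ == "__main__":
    print(asyncio.run(run_parallel()))
```

Concurrency only helps when the tasks are independent. If the routes depend on the final schema, the schema agent has to finish first or the two results need a reconciliation step afterwards.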

Traditional pipelines often require bespoke scripts tailored for each language or environment. In contrast, AI agents working at the AST level or using fine-tuned language models can interpret and manipulate multi-language codebases. For example, an agent generating a full-stack application may simultaneously produce React components, Node.js APIs and SQL schema migrations. The system understands how changes in one layer affect others and adapts accordingly.

Infrastructure provisioning is one of the most error-prone aspects of software deployment. Agent-driven pipelines mitigate this through Infrastructure-as-Code (IaC) generation. An infra agent can dynamically produce Terraform, Pulumi or CloudFormation scripts to set up databases, compute instances, storage or networking components. These agents can even validate the output through dry runs, policy compliance checks or state comparisons.
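A rough sketch of IaC generation followed by a policy check is shown below. It emits a fragment in Terraform's JSON configuration syntax for an `aws_db_instance` and then inspects it before anything is applied. The fragment is intentionally incomplete (no credentials, networking or provider block) and the two policy rules are assumptions picked for illustration; `render_db_resource` and `policy_violations` are hypothetical helpers.

```python
import json
from typing import Any, Dict, List


def render_db_resource(name: str, engine: str = "postgres",
                       encrypted: bool = True) -> Dict[str, Any]:
    """Build a Terraform JSON-syntax fragment for one database instance."""
    return {
        "resource": {
            "aws_db_instance": {
                name: {
                    "engine": engine,
                    "instance_class": "db.t3.micro",
                    "allocated_storage": 20,
                    "storage_encrypted": encrypted,
                    "publicly_accessible": False,
                }
            }
        }
    }


def policy_violations(doc: Dict[str, Any]) -> List[str]:
    """Check the generated fragment against two illustrative policies."""
    violations: List[str] = []
    instances = doc.get("resource", {}).get("aws_db_instance", {})
    for name, cfg in instances.items():
        if not cfg.get("storage_encrypted", False):
            violations.append(f"{name}: storage must be encrypted")
        if cfg.get("publicly_accessible", False):
            violations.append(f"{name}: must not be publicly accessible")
    return violations


if __name__ == "__main__":
    doc = render_db_resource("app_db")
    print(json.dumps(doc, indent=2))
    print(policy_violations(doc))
```

Checking the generated structure before handing it to the provisioning tool is the cheap part of validation; a dry run against real state would still be needed to catch drift.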

Agents use vector databases or external memory systems to maintain context over long-running sessions. This includes design decisions, architectural trade-offs, schema evolution and dependency versions. For example, if a database schema changes midway through development, the agent can propagate those changes across related API endpoints, validation logic and test cases. This results in a cohesive, synchronized codebase that evolves holistically.
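To show how similarity-based recall works in principle, here is a toy memory that uses bag-of-words vectors and cosine similarity. This is a stand-in for a real embedding model and vector database, which would capture meaning far better than word overlap; `embed`, `cosine` and `Memory` are names invented for this sketch.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy embedding: word counts. A real system would use a learned model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class Memory:
    """Stores notes and returns the ones most similar to a query."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def remember(self, note: str) -> None:
        self.items.append((note, embed(note)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]),
                        reverse=True)
        return [note for note, _ in ranked[:k]]


if __name__ == "__main__":
    memory = Memory()
    memory.remember("projects table gained a status column")
    memory.remember("deploy target is vercel")
    print(memory.recall("which column was added to the projects table"))
```

An agent that is about to edit an endpoint could query this memory for related schema decisions first, which is the mechanism behind propagating a schema change to dependent code.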

The starting point of an agent-driven pipeline is the ingestion of requirements, typically through natural language. Developers or product owners can input tasks such as, "Build a REST API for project management with Supabase backend and Vercel deployment." The Planner Agent parses this input into a structured specification.

    features:
      - REST API with authentication
      - PostgreSQL via Supabase
      - React frontend UI
      - CI/CD with Vercel

This intermediate representation is then passed downstream to other agents for further processing.
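The step from a natural language request to a structured specification can be sketched as follows. A real Planner Agent would presumably use a language model for this; the keyword lookup here is only a placeholder so the example runs on its own, and `Spec`, `KEYWORDS` and `plan` are hypothetical names.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class Spec:
    """Intermediate representation handed to downstream agents."""
    features: List[str] = field(default_factory=list)


# Placeholder mapping from phrases in the prompt to planned features.
KEYWORDS: Dict[str, str] = {
    "rest api": "REST API",
    "supabase": "PostgreSQL via Supabase",
    "vercel": "CI/CD with Vercel",
}


def plan(prompt: str) -> Spec:
    text = prompt.lower()
    # Keep features in the order the keywords are declared above.
    return Spec(features=[feature for keyword, feature in KEYWORDS.items()
                          if keyword in text])


if __name__ == "__main__":
    request = ("Build a REST API for project management with "
               "Supabase backend and Vercel deployment.")
    print(asdict(plan(request)))
```

The value of the typed `Spec` is that downstream agents consume a fixed structure instead of re-interpreting free text, so a misunderstanding is caught once at the planning stage.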

The Builder Agent receives the structured plan and proceeds to generate:

Agents operate across file boundaries, maintain AST coherence and generate modular, readable code with comments and inline documentation.

At critical stages such as test completion, major refactors or deployment readiness, agents record checkpoints. These checkpoints contain the project state, file diffs, system logs and rollback instructions. MCP enables the following:

For instance, after integrating OAuth, a test agent might detect a failure in the authentication middleware. Using MCP, the system can revert to the last successful checkpoint and retry with alternate logic.
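The checkpoint and revert behavior can be illustrated with a small in-memory store. This sketch says nothing about how MCP itself works; it only shows the general pattern of snapshotting project state at a known-good point and restoring it after a failure. `Checkpoint` and `CheckpointStore` are hypothetical names.

```python
import copy
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Checkpoint:
    label: str
    files: Dict[str, str]  # path -> file contents at checkpoint time


class CheckpointStore:
    def __init__(self) -> None:
        self._stack: List[Checkpoint] = []

    def save(self, label: str, files: Dict[str, str]) -> None:
        # Deep copy so later edits do not mutate the stored snapshot.
        self._stack.append(Checkpoint(label, copy.deepcopy(files)))

    def rollback(self) -> Dict[str, str]:
        """Return a copy of the most recent saved state."""
        if not self._stack:
            raise LookupError("no checkpoint to roll back to")
        return copy.deepcopy(self._stack[-1].files)


if __name__ == "__main__":
    store = CheckpointStore()
    files = {"auth.py": "def login(): ..."}
    store.save("tests green", files)

    files["auth.py"] = "def login(): broken_oauth()"  # failed change
    files = store.rollback()
    print(files)
```

A production version would store diffs rather than full copies and would also capture logs and rollback instructions, as described above.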

The Critic Agent is responsible for evaluating code quality, logical correctness and test coverage. It performs static code analysis using linters, runs pre-defined and AI-generated test suites and checks for compliance with architectural constraints.

For example:

    Running tests...
    22 passed, 3 failed
    Failure in POST /projects route: Missing status code validation

The agent then tags the relevant sections of code, submits feedback and optionally invokes the Builder Agent for self-healing edits. This feedback loop continues until success criteria are met.
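One way a critic could turn a test report like the one above into a decision is sketched below. The report format is taken from the example output; the parsing rules and the names `RunSummary`, `parse_summary` and `needs_repair` are assumptions made for this illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class RunSummary:
    passed: int
    failed: int

    @property
    def ok(self) -> bool:
        return self.failed == 0


def parse_summary(report: str) -> RunSummary:
    """Extract pass and fail counts from a plain-text test report."""
    match = re.search(r"(\d+) passed, (\d+) failed", report)
    if match is None:
        raise ValueError("report does not contain a pass/fail summary")
    return RunSummary(passed=int(match.group(1)), failed=int(match.group(2)))


def needs_repair(report: str) -> bool:
    # The critic hands control back to the builder while any test fails.
    return not parse_summary(report).ok


if __name__ == "__main__":
    REPORT = (
        "Running tests...\n"
        "22 passed, 3 failed\n"
        "Failure in POST /projects route: Missing status code validation\n"
    )
    print(parse_summary(REPORT), needs_repair(REPORT))
```

In practice a structured report format (for example JUnit XML) is more robust than scraping text, but the control flow stays the same.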

Once the codebase passes tests and validations, the Deployer Agent triggers provisioning and deployment. This may involve:

Configuration Example:

    ci_pipeline:
      runner: github-actions
      stages:
        - lint
        - test
        - deploy
      provider:
        platform: vercel
        rollback: true
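To illustrate how a deployer might act on a configuration like this, the sketch below runs the stages in order and triggers a rollback when one fails. The configuration is written as a Python dictionary to avoid a YAML dependency, and it assumes that `rollback` belongs to the `provider` section. The stage callables are hypothetical stand-ins for real lint, test and deploy commands.

```python
from typing import Callable, Dict, List

CONFIG = {
    "runner": "github-actions",
    "stages": ["lint", "test", "deploy"],
    "provider": {"platform": "vercel", "rollback": True},
}


def run_pipeline(config: dict,
                 steps: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run stages in order; stop at the first failure."""
    log: List[str] = []
    for stage in config["stages"]:
        if steps[stage]():
            log.append(f"{stage}: ok")
            continue
        log.append(f"{stage}: failed")
        # Only roll back when the provider section asks for it.
        if config["provider"].get("rollback"):
            log.append("rollback: triggered")
        break
    return log


if __name__ == "__main__":
    outcome = run_pipeline(CONFIG, {
        "lint": lambda: True,
        "test": lambda: False,  # simulate a failing test stage
        "deploy": lambda: True,
    })
    print(outcome)
```

Stopping at the first failed stage keeps a broken build from ever reaching the deploy step.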

GoCodeo is a developer-first platform that integrates a multi-agent architecture directly into your local development environment, specifically inside Visual Studio Code. With modules like Ask, Build, Checkpoint and Test, GoCodeo offers a seamless, AI-augmented pipeline that goes from requirements to deployment with minimal human intervention.

The entire process can execute in minutes, enabling developers to offload repetitive tasks and focus on logic, architecture and business goals.

Since agents have access to sensitive environments and can modify production code, they must operate within strict access controls and sandbox environments. Role-based access control (RBAC), least privilege policies and cryptographic signing should be enforced.
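A least-privilege check for agents can be as simple as an explicit allow-list per role, as in this sketch. The roles and actions are invented for illustration, and a real deployment would enforce this at the platform level (for example through cloud IAM or Kubernetes RBAC) rather than inside the agent process.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Action(Enum):
    READ_REPO = "read_repo"
    WRITE_CODE = "write_code"
    DEPLOY = "deploy"


# Each role gets only the actions it needs; anything else is denied.
ROLE_PERMISSIONS: Dict[str, FrozenSet[Action]] = {
    "planner": frozenset({Action.READ_REPO}),
    "builder": frozenset({Action.READ_REPO, Action.WRITE_CODE}),
    "deployer": frozenset({Action.READ_REPO, Action.DEPLOY}),
}


def authorize(role: str, action: Action) -> None:
    """Raise PermissionError unless the role explicitly allows the action."""
    allowed = ROLE_PERMISSIONS.get(role, frozenset())
    if action not in allowed:
        raise PermissionError(f"{role} may not perform {action.value}")


if __name__ == "__main__":
    authorize("builder", Action.WRITE_CODE)  # permitted, returns silently
    try:
        authorize("builder", Action.DEPLOY)
    except PermissionError as err:
        print(err)
```

Unknown roles fall through to an empty permission set, so the default is to deny.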

AI-generated code and infrastructure should be auditable. This means every agent action must be logged with its intent, result and delta change. Developers should be able to trace a deployment back to its requirement prompt.
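An audit trail with intent, result and delta could look roughly like this. Each entry carries the identifier of the originating prompt so that later actions can be traced back to it. The field names and the `AuditLog` class are assumptions for this sketch; the delta is a unified diff produced with the standard `difflib` module.

```python
import difflib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class AuditEntry:
    prompt_id: str  # links the action back to the requirement prompt
    agent: str
    intent: str
    result: str
    delta: str


def make_delta(before: str, after: str, path: str) -> str:
    """Return a unified diff describing the change to one file."""
    return "".join(difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=path,
        tofile=path,
    ))


class AuditLog:
    def __init__(self) -> None:
        self._entries: List[AuditEntry] = []

    def record(self, entry: AuditEntry) -> None:
        self._entries.append(entry)

    def trace(self, prompt_id: str) -> List[AuditEntry]:
        # Everything that happened because of one requirement prompt.
        return [e for e in self._entries if e.prompt_id == prompt_id]


if __name__ == "__main__":
    log = AuditLog()
    diff = make_delta("status = 200\n", "status = 201\n", "routes.py")
    log.record(AuditEntry("req-1", "builder", "fix status code",
                          "success", diff))
    for entry in log.trace("req-1"):
        print(entry.agent, entry.intent, entry.result)
        print(entry.delta)
```

Making entries immutable (`frozen=True`) is a small safeguard; a production log would additionally be append-only and stored outside the agents' write access.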

Agents must integrate with existing ecosystems, including Git, Docker, Kubernetes, monitoring stacks like Prometheus and CI/CD services. Compatibility is critical for incremental adoption.

As agent frameworks mature, we anticipate:

Agent-driven pipelines are not just a productivity enhancement; they are a systemic shift in how software is built, tested and shipped.

Agent-driven pipelines represent a transformative leap for software engineering. By decentralizing the responsibilities traditionally managed by developers and DevOps engineers, these systems enable faster iteration, higher code quality and more resilient infrastructure management. Whether you are working on enterprise-scale microservices or bootstrapping a startup MVP, integrating agent-driven workflows will fundamentally change how your team delivers software.

For developers, these pipelines mean spending less time on boilerplate and more time solving meaningful problems. For organizations, they promise reduced time-to-market, higher reliability and better scalability.

The era of agent-native development is already here. The only question is, how soon will you adopt it?